Bishop Hill

Bishop Hill Wilson trending

Jan 14, 2016  Climate: MWP

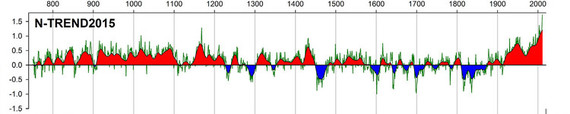

Rob Wilson emails a copy of his new paper (£) in QSR, co-authored with, well, just about everybody in the dendro community. It's a tree-ring based reconstruction of summer temperatures in the northern hemisphere; it's called N-TREND and, excitingly, it's a hockey stick!

I gather that Steve McIntyre is looking at it already, so I shall leave it to the expert to pronounce. But on a quick skim through the paper, there are some parts that are likely to prompt discussion. For example, I wonder how the data series were chosen. There is some explanation:

For N-TREND, rather than statistically screening all extant TR [tree ring] chronologies for a significant local temperature signal, we utilise mostly published TR temperature reconstructions (or chronologies used in published reconstructions) that start prior to 1750. This strategy explicitly incorporates the expert judgement the original authors used to derive the most robust reconstruction possible from the available data at that particular location.

But I'd like to know how the selection of chronologies used was made from the full range of those available. Or is this all of them?

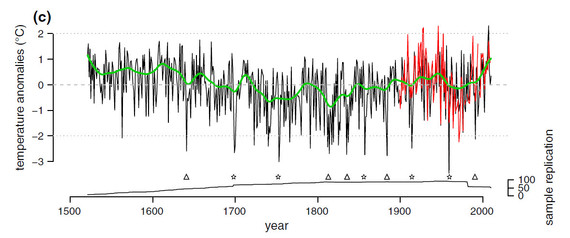

If so, there are not many - only 54 at peak. Moreover, the blade of the stick is only supported by a handful. On a whim, I picked one of these series at random - a reconstruction of summer temperatures for Mount Olympus by Klesse et al, which runs right up to 2011. Here's their graph:

You can see the uptick in recent years, but you can also see an equally warm medieval warm period. This is only one of the constituent series of course, but I think it will be worth considering this in more detail as it does raise questions over the robustness of the N-TREND hockey stick blade.

Reader Comments (171)

Paul Homewood has now got a post up on his blog on this study and BH blog comments

https://notalotofpeopleknowthat.wordpress.com/2016/01/14/new-tree-ring-study-ignores-the-effect-of-co2/#comments

"It is well established that CO2 has a significant effect on plant growth, and accepted that tree ring studies can be skewed as a result.

It was therefore astonishing to find this comment from one of the study’s authors, the UEA’s Tim Osborn, on Bishop Hill:

5. CO2 fertilisation effects.

We don’t identify or remove such effects. The empirical evidence for sustained effects (i.e. over decades) on trees in cool, moist locations over long periods of time is scarce."

Just the point I made above. Why is the effect of man made CO2 not factored in? Inconvenient because it might "diffuse" the "hockey stick"? It would seem to me the "evidence" is scarce because the study of it is, well scarce.

quick comment to Steve Mac - yes - some ring-width records from D'Arrigo et al. 06 have been replaced with maximum density chronologies (or a fusion of RW and MXD). If you read recent literature, "divergence", at least in NW North America, is certainly a significant problem in ring-width data in most locations, but density parameters, a much "cleaner" proxy of summer temperature anyway, were used as they are simply a better proxy archive for what we're trying to do. That said, please read the paper carefully as there is certainly much debate w.r.t. the use of RW and/or MXD w.r.t. retention of high and low frequency variability.

For the rest - I will try and dip back later today to try and address some of the more sensible comments.

Oh - by the way - w.r.t. the number of co-authors - please Google the definition of "consortium".

I wouldn't say that it was 'well-established' that CO2 affects tree growth significantly at such low levels of mean increase. It's a postulation only. You just need to look at raw CO2 output to see that the variance throughout the day totally swamps the daily increase. In fact you'd be hard-pressed to notice any increase at all unless you are running the data through the numerical sausage machine at Mauna Loa. The level in greenhouses is far above atmospheric and accompanied by nutrients; the presence/absence of which is vital as CO2 experiments in the desert show. The demonstrable greening of the planet for the last 40 years is most likely due to the warming itself rather than CO2 because there has also been a plateau in the greening since 2000 as reported by Steve Running, using the MODIS satellite output.

I would invite Phil again to confirm why he dismisses interpolation from a climatically similar area a few hundred kilometres away, but accepts it for other temperature records with different climates from up to 1500 km?

Where do you get 1500km from?

Interpolation across an area of sparse coverage is perfectly fine, as long as you weight the readings by distance from the origin. But you can hardly call the resulting product 'Central England'.

I haven't read the paper, only seen reference to it somewhere in the past.

Tree responses to rising CO2 in field experiments: implications for the future forest

Also this paper http://www.sciencedaily.com/releases/1998/08/980814065506.htm

No doubt whatsoever that there will be more recent research proving beyond all shadow of doubt that high levels of CO2 kill virtually all species of tree stone dead.

It's a bit of a concern that they refer to scientific consensus instead of scientific evidence don't you think?

Regards

Mailman

"No doubt whatsoever that there will be more recent research proving beyond all shadow of doubt that high levels of CO2 kill virtually all species of tree stone dead."

If you look at the Antarctic ice-cores it's clear that high levels of CO2 produce sudden cooling - well for anyone who thinks CO2 is a climate driver it should. Oh wait - they just ignore that inconvenient aspect of the data too, just like the contradictory Arctic ice-cores, plant stomata data and the geological record that all say there is no CO2-T correlation and just like they ignore modern-day Antarctic cooling, the pause in stratospheric cooling, the lack of surface ocean warming since accurate records were available, the model-versus-reality divergence and the lack of the predicted tropi-tropo hotspot. Ho-hum.

But I digress. Science marches on despite the blowhards and it now shows beyond a shadow of a doubt that skeptics were correct in saying that there was a medieval warm period after all and that all those Mann-bots were wrong.

Let us return to science 101: if the method of taking measurements is subject to unknown and/or not well understood issues, then any results are also subject to unknown and/or not well understood issues, no matter how much wishful thinking you use.

The idea that computing power can overcome your inability to take meaningful measurements is somewhat odd.

Here's the abstract of that paper I referred to above:

"Terrestrial net primary production (NPP) quantifies the amount of atmospheric carbon fixed by plants and accumulated as biomass. Previous studies have shown that climate constraints were relaxing with increasing temperature and solar radiation, allowing an upward trend in NPP from 1982 through 1999. The past decade (2000 to 2009) has been the warmest since instrumental measurements began, which could imply continued increases in NPP; however, our estimates suggest a reduction in the global NPP of 0.55 petagrams of carbon....."

Zhao, M. & Running, S. W. Science 329, 940-943

Of course maybe it's just a coincidence that NPP dropped (in fact it plateaus if we change the trend startpoint by just 1 year) during the temperature plateau of 2000->2009, or it could suggest that the earlier growth was more due to warming than CO2 enrichment. It seems more persuasive to me than experiments with a sudden artificial enrichment of 300ppm that trees never see in the wild. Sure, a few percent CO2 enrichment perhaps, but enough to engender a hockey-stick? Doubtful!

bill

"Mann with his head hung in shame"

That's too much for my imagination. I like 'Mannikins' though.. :-)

Aila

"Victoria's Secret models"

A lot more fun than the climate ones, I imagine. And at least their outfits consume very little in the way of carbon compounds.

Like Rob Wilson, I'll try to keep my comment brief as I imagine Steve McIntyre will write on this topic, and when he does, he'll likely cover many of the things I would point out. There seems little point in me bringing up specific details when he'll likely do a better job of it. I will, however, address the central issue I raised which Wilson addressed in his comment thusly;

While I can think of one or two data sets offhand that could be added under this criterion, I can think of many other data sets which could be included under a similar criterion that merely removed the restriction that the data sets be the "latest PUBLISHED versions." Unfortunately, the criterion Wilson states demonstrates the exact problem I expressed with this paper. Because the co-authors of the paper are the ones who created nearly all the data sets used in the paper, the co-authors of this paper are ultimately able to pick and choose which data sets to use by simply choosing which data sets to publish.

This doesn't have to involve any nefarious intent to be a serious issue. There are tons of decisions that are involved in making these data sets. Which data gets included in them, and how that data is handled, involves a lot of subjective decisions. This is well-demonstrated by the fact there are data sets in this paper where one could point to six or more different versions that could potentially be used. The "latest PUBLISHED version" may happen to be the one an author of this paper thinks is best, but simply relying on his opinion that it is the best makes for a poor criterion. I'm sure the authors have reasons for their opinions, but that doesn't change the fact this paper's results depend largely upon the opinions of its authors.

The lack of objective selection criteria means any potential mistakes and biases by these people can have an unknowable effect on its results. In the most mundane manner, a person may examine a number of potential data sets but only take the time to publish ones which seem to give good results. That's understandable. However, it means (some of the) agreement between the resulting data sets may not accurately reflect the real-world signals, but rather, the fact people had similar expectations. This is especially worrying given co-authors of this paper have said things like (Gordon Jacoby):

In the past. Even if this paper's results are unaffected by the thousands of subjective decisions its authors made while creating these data sets, there is simply no way to know that.

Brandon's above comment is entirely on point, as is his reference to Jacoby's notorious quotation. As a reminder to readers, Jacoby's quote arose in the following context. The 1990s vintage Jacoby-D'Arrigo reconstruction had been constructed by using the 10 most "temperature sensitive" chronologies from an inventory of 35 or so, plus the very HS Gaspe series from outside the region. I asked Jacoby for the data for the series that had been rejected. He refused and the series, to my knowledge, have never seen the light of day.

In simulations with series with high autocorrelation (as are Jacoby chronologies), picking the 10 most "temperature sensitive" of 35 series necessarily yields a HS-like series - a point discussed very early on at Climate Audit and independently reported over the years by David Stockwell, Lubos Motl, Jeff Id and Lucia, and discussed often by Willis Eschenbach. Correlation screening has the same effect. This point is pablum obvious to "skeptics" but not conceded by specialists.
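The screening argument above can be tried out with a small simulation. What follows is only a minimal sketch under stated assumptions, not anyone's published code: the AR(1) coefficient of 0.9, the 500-year length, the 100-year calibration window and the linearly rising target are all hypothetical choices, and the function names are made up for illustration. The series contain no temperature signal at all, so any shape in the screened average comes from the selection step.

```python
import numpy as np

def ar1_series(n_years, phi, rng):
    """One AR(1) red-noise series: x[t] = phi * x[t-1] + white noise."""
    x = np.zeros(n_years)
    shocks = rng.standard_normal(n_years)
    for t in range(1, n_years):
        x[t] = phi * x[t - 1] + shocks[t]
    return x

def screen_and_average(n_series=35, n_keep=10, n_years=500,
                       calib=100, phi=0.9, seed=0):
    """Keep the n_keep series best correlated with a rising target, then average."""
    rng = np.random.default_rng(seed)
    series = np.array([ar1_series(n_years, phi, rng) for _ in range(n_series)])
    # Hypothetical rising "instrumental" record over the calibration window.
    target = np.linspace(0.0, 1.0, calib)
    # Correlation of each series with the target over the last `calib` years.
    corr = np.array([np.corrcoef(s[-calib:], target)[0, 1] for s in series])
    keep = np.argsort(corr)[-n_keep:]  # indices of the highest correlations
    return series.mean(axis=0), series[keep].mean(axis=0), corr, keep

all_mean, picked_mean, corr, keep = screen_and_average()
calib_years = np.arange(100)
slope_all = np.polyfit(calib_years, all_mean[-100:], 1)[0]
slope_picked = np.polyfit(calib_years, picked_mean[-100:], 1)[0]
print(f"calibration-period slope, all 35 series:      {slope_all:+.4f}")
print(f"calibration-period slope, 10 screened series: {slope_picked:+.4f}")
```

Plotting `picked_mean` against `all_mean` for a range of seeds and `phi` values is a quick way to see how far the shape of the screened average depends on the selection step under these assumptions.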

The lack of concern of specialists over such issues means that problems are deeply embedded in dendro literature, which is accordingly compromised. As already noted, I've spot checked a few sites and immediately located this sort of problem.

Making chronologies is a difficult statistical problem and dealt with in a very unsatisfactory manner by the dendro community, who rely on ad hoc recipes rather than statistical procedures known to the rest of the world. But that's a long story for another occasion.

Hi – to those with an open mind at least

probably my last entry as I await with baited breath for Steve to weigh in to focus on issues that will ultimately not change the main conclusions and focus of the paper. For those who have not read the paper (you should do – it is here: https://ntrenddendro.wordpress.com/publications/), we make no grandiose claims (this is not a Science/Nature paper!) – the focus really is on the uncertainties and how we can improve on the current results.

A couple of specific points:

firstly, re. CO2 fertilisation effect

there is no evidence to suggest that tree growth is elevated w.r.t. CO2. Such an effect would result in an overall misfit in the calibration period (i.e. linear trend in the residual error) which we do not see.
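The residual check described here can be sketched roughly as follows. This is an illustration on synthetic data, not the N-TREND calibration: the function name, the noise levels and the 0.02 per year "fertilisation" drift are all assumptions made for the example. The idea is to calibrate temperature on the proxy by ordinary least squares and then fit a straight line through the residuals against time.

```python
import numpy as np

def residual_trend(proxy, temperature, years):
    """Regress temperature on the proxy, then return the linear trend of the residuals."""
    slope, intercept = np.polyfit(proxy, temperature, 1)
    residuals = temperature - (slope * proxy + intercept)
    return np.polyfit(years, residuals, 1)[0]

# Synthetic calibration period (all numbers are hypothetical).
rng = np.random.default_rng(1)
years = np.arange(1900, 2000)
t = years - years[0]
temperature = 0.01 * t + rng.normal(0.0, 0.5, t.size)

# A proxy that tracks temperature, and one with an extra non-climatic drift added.
proxy_clean = temperature + rng.normal(0.0, 0.05, t.size)
proxy_fertilised = proxy_clean + 0.02 * t

trend_clean = residual_trend(proxy_clean, temperature, years)
trend_fertilised = residual_trend(proxy_fertilised, temperature, years)
print(f"residual trend, clean proxy:      {trend_clean:+.5f} per year")
print(f"residual trend, fertilised proxy: {trend_fertilised:+.5f} per year")
```

One caveat on the design: the check relies on year-to-year variability in the record. If both temperature and proxy were almost pure trend, the regression would absorb the drift and the residuals would show little.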

I also urge those, who really are open minded and want to learn, to read the following paper:

Ljungqvist, F. C., P. J. Krusic, G. Brattström, and H. S. Sundqvist, 2012: Northern Hemisphere temperature patterns in the last 12 centuries. Climate of the Past, 8, 227-249.

http://www.clim-past.net/8/227/2012/cp-8-227-2012.pdf

Specifically, look at Figure 4a and think about the implications of that multi-proxy comparison w.r.t. CO2 fertilisation in tree-ring data.

Lastly, I don’t want to deflate Steve’s likely arguments and in-depth “wise” thoughts on proxy data choice, but I must reiterate that we have used the latest updated versions of many of the records. Of course there are older versions, but only a fool would use an old version with less data or that had calibration issues etc. Would you rather drive a Trabant or a 2016 BMW series 1? Duh!

But importantly, Steve has rightly noticed that we (OK – ME – blame me) have not used certain records that were used in previous studies. Firstly, again, for those wanting to catch up with the background, I am specifically talking about two papers:

D'Arrigo, R., Wilson, R. and Jacoby, G. 2006. On the long-term context for late 20th century warming. Journal of Geophysical Research, Vol. 111, D03103, doi:10.1029/2005JD006352

https://www.st-andrews.ac.uk/~rjsw/all%20pdfs/DArrigoetal2006a.pdf

Wilson, R., D’Arrigo. R., Buckley, B., Büntgen, U., Esper, J., Frank, D., Luckman, B., Payette, S. Vose, R. and Youngblut, D. 2007. A matter of divergence – tracking recent warming at hemispheric scales using tree-ring data. JGR - Atmospheres. VOL. 112, D17103, doi:10.1029/2006JD008318

https://www.st-andrews.ac.uk/~rjsw/all%20pdfs/Wilsonetal2007b.pdf

I have taken all the data from these two papers that were NOT included in N-TREND 2015 and using both independent data-sets, made a simple hemispheric mean (no weighting between continents etc) and have only stabilised the variance as N changes through time and show them at the link below. The composite mean series are shown as z-scores (standard deviations) w.r.t. 1400-1750. There are of course differences in the pre 1200 period as replication is much greater in N-TREND, but there are also differences in the 20th century which reflect the improved calibration fidelity of the updated (and new) records used in N-TREND2015.
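For readers wondering what a composite of this kind involves, here is a rough sketch. It is not the N-TREND code. It assumes the input series are already on a common scale, and it uses one common form of variance adjustment, scaling the mean by sqrt(n / (1 + (n - 1) * rbar)), where n is the number of series available in a year and rbar is an assumed mean inter-series correlation. The function name and the synthetic data are made up for the example.

```python
import numpy as np

def hemispheric_composite(data, years, rbar, ref_start=1400, ref_end=1750):
    """Simple mean of the available series, variance-adjusted, as z-scores.

    data : array of shape (n_series, n_years), NaN where a series has no value
    rbar : assumed mean inter-series correlation (0 <= rbar < 1)
    """
    ref = (years >= ref_start) & (years <= ref_end)
    n = np.sum(~np.isnan(data), axis=0)           # replication in each year
    mean = np.nansum(data, axis=0) / np.maximum(n, 1)
    mean = np.where(n > 0, mean, np.nan)          # years with no data stay NaN
    # The variance of a mean of n series shrinks as n grows; rescale so that
    # changes in replication do not change the spread of the composite.
    stabilised = mean * np.sqrt(n / (1.0 + (n - 1.0) * rbar))
    # Express as standard deviations relative to the reference period.
    z = (stabilised - np.nanmean(stabilised[ref])) / np.nanstd(stabilised[ref])
    return z, n

# Synthetic example: five series, three of which only start in 1200.
rng = np.random.default_rng(2)
years = np.arange(1000, 2001)
data = rng.standard_normal((5, years.size))
data[:3, years < 1200] = np.nan
z, n = hemispheric_composite(data, years, rbar=0.3)
```

By construction the composite has mean 0 and standard deviation 1 over 1400-1750, so departures elsewhere read directly as standard deviations from that baseline.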

https://www.st-andrews.ac.uk/~rjsw/N-TREND/unuseddata.jpg

not much more to say I think

Rob

Unfortunately not having the will to fathom this paper's particular reconstruction methodology, this comment may not actually apply. But it is evident in general that a well formulated method for temperature reconstruction from proxies requires an hypothesis about proxy as function of temperature. This has then to be inverted, after calibration, in order to reconstruct historical temperatures from proxy data. While this may be an obvious requirement, the few papers of this kind I have looked at actually do not have well formulated functional hypotheses, but rather ad hoc proxy reconstructions. Who knows, these may work, but not in a systematic way. Say what you like about MBH98 (and many have!), that paper did at least include a wf model of proxy as function of temperature and how it was being inverted.

Rob Wilson

"...I await with baited breath for Steve to weigh in to focus on issues that will ultimately not change the main conclusions and focus of the paper"

"...I don’t want to deflate Steve’s likely arguments and in-depth “wise” thoughts..."

Quite arrogant. I wonder why do you come to a place like this for criticism and debate if that's what you think of Steve's "wise" thoughts?

Willis Eschenbach

"He's one of the good guys"

Sven said:

Rob, truly, I would not recommend that approach. That's like showing a red flag to a bull ... anyone who thinks that Steve McIntyre focuses on "issues that will ultimately not change the main conclusions and focus of the paper" is whistling past the graveyard.

He's here answering questions at least, which is more than you can say for 99% of the mainstream, so I'll give him props.

w.

Tiny point to Rob Wilson: the expression is bated breath rather than baited breath. Sometimes written as 'bated as in abated. And often used as a whatchamacallit - As in '.. I won't hold my breath waiting on you..".as reverse of 'I will withhold my breath'..wish my brain would function. If anyone reads this and can remember the term, pls put me out of misery.

Hmm, which bait would one choose? Would it vary according to circumstance?

I understand Steve McIntyre's point (bad chronologies cuz they are not dealt with according to stats standards). But I do not understand Rob's point " ...to focus on issues that will ultimately not change the main conclusions and focus of the paper...." How do you answer the question: if the chronologies are problematic, how can they fail to change the conclusions of the paper? Are you saying that you have used these statistical procedures in this paper?

This is a genuine query. Many thanks.

Willis,

sorry - if you knew me - arrogance is not really the correct term - a weary flippancy maybe. From years of minimal interaction with CA and BH, I see constant blog comments from people who are NOT willing to engage with the relevant literature. Many of the issues raised here have been examined and there are PLENTY of papers out there (if you cannot access them - e-mail the authors).

I am not prescient as to how Steve will respond to the work (maybe he won't). I know that the current N-TREND network is a substantial improvement on DWJ06, but there are problems, as detailed in the paper.

have a good weekend all

signing off

I thought he was doing quite well, up to that point. Relatively speaking.

Rob Wilson

Did you look at the correlation between summer and annual mean temperatures for the different sites?

.

“Correlation screening has the same effect. This point is pablum obvious to "skeptics" but not conceded by specialists …”.

===============================

It’s circular reasoning plain and simple as Willis Eschenbach so clearly explained (10:14 PM).

Enough of the tree-house kiddy hate notation please - can we have (a) the correct spelling of sceptics, and (b) the correct use of quotation marks; i.e. "specialists" / "scientists" - unless of course evidence for the unquoted form is available.

I await with bated breath...

I consider it unfortunate Rob Wilson has chosen an obnoxious approach. The issue I raised is an abundantly simple one. A paper whose results depend upon many subjective decisions made by its authors is one people can reasonably be skeptical of. Even if the authors are confident this concern couldn't affect their results, there can be no doubt it is an issue which should affect people's perception of those results. Responding to people who point out this unremarkable idea with obnoxiousness can only serve to enhance their skepticism. Remarks like this:

May seem particularly mendacious to people acquainted with the history of paleoreconstructions given Rob Wilson knows fully well any number of his co-authors have done exactly what he derides people for supposedly suggesting (leaving aside whether anyone actually suggested such). Wilson is perfectly aware many updated data sets have, at various points, languished in obscurity as older, outdated ones were used instead due to them giving more favorable results.

Though perhaps that was intentional, with the irony meant as a subtle slam against behavior from his co-authors in the past he has known to be wrong and is not pleased about. I don't know. What I do know is his comment makes the troubling remark:

Anyone who has followed the hockey stick debates as long as I have will undoubtedly recognize quite a few proxies mentioned in that paper and realize the authors of the paper made no attempt to verify their data was suitable for paleoclimate reconstructions. That would be why proxies like Tiljander show up in it, even though everyone and their uncle should know by now the modern portions of the Tiljander proxies were distorted by non-natural influences.

That should have made the proxy completely unusable for the authors given they required their proxies have data for the modern period. It didn't. It didn't because they used the same sort of subjective approach to their work as Wilson and his co-authors have done. They used whatever data they found "expert opinion" said was suitable, making no effort to verify that opinion was correct. As a result, they ignored many well-known and well-documented problems with the data they used, to the point they included data which was indisputably inappropriate for their work.

That Wilson promotes this work, suggesting its results should allay people's concerns, is troubling as this paper is a great example of what's wrong with not using objective selection criteria. As it demonstrates, bad data can be used and reused over and over while other, potentially good data, is ignored simply because people chose not to publish papers about it.

The average person would have no problem understanding why that is a recipe for disaster. It's unclear why Wilson would have trouble figuring it out.

I'm not put off by Rob's comment, so no one needs be put off on my behalf.

To the extent that specialists in the community have been blind/obtuse to previous lengthy commentary on the perils of data snooping and ex post screening, I doubt that anything will change their practices. So Rob is undoubtedly correct in saying that no amount of criticism about data snooping and ex post data torture will change anyone's mind. But we labor on nonetheless.

So data snoop, post data torque;

The flippant just don't give a fork

When every cake so buttercupped

Lands fork ready, butter side up.

=======================

I must admit that I find this criticism of Rob Wilson's tone fascinating. Come on, the tone of this site and the typical comments is appalling. All sorts of accusations - implied and explicit - of fraud, misconduct, scientists being dishonest. Any paper that doesn't suit the narrative is typically presented as having some kind of issue. The comments range from outright science denial to barely disguised science denial.

Here you have two authors of the paper actually engaging and making some interesting comments and explaining some of the aspects of their paper, and people are complaining about the tone. Not only did the tone seem perfectly fine to me (although, this may be partly because my expectations aren't particularly high these days) but what do people here really expect? I'm somewhat amazed that the authors of papers actually bother in the first place, and even more amazed that, typically, they respond as reasonably as they do. At the end of the day, you get what you deserve and - IMO - you get, here, much much better than you deserve.

I completely agree with ATTP here

It is to Rob Wilson's credit that he stays involved here, and some of the comments do not encourage him to stay. I am particularly impressed that Rob simply ignores most of the critical comments rather than getting steamed up about them. Many other scientists who have ventured onto sceptical sites such as here and CA respond to the noise and ignore the substantive points put to them, before finally disappearing.

For the sake of it, I went and followed some of the links that Rob Wilson put up which actually lead to Bradley's book "Paleoclimatology: Reconstructing Climates of the Quaternary" (1999). In chapter 10 he deals with Dendrochronology, especially pp 414.

Multivariate analysis is performed on local climate data to try and determine the relationship for tree ring width. Now some people may say what's wrong with that, it sounds reasonable? The problem is that before you perform a statistical analysis on data you need to make sure the uncertainty in the data itself is characterised and known. You need to know how the climate relevant data was taken and how rigorously it was taken.

If you assume that individual sample points have uncertainty distributions that are "normal" you have actually slipped into the realm of theory. To make the results useful in the real world you need to watch things like this. Often uncertainty (or error) bars get increased because it is just not feasible to characterise datum errors. It just isn't possible to use the argument that the errors will decrease with more samples.

The second thing to note is that this is another example of the chain of references, i.e. my paper is valid because the reference is valid. That reference is valid because the reference it uses is valid. But that reference is only valid if you assume A, B and C. And A, B and C are not visible at all to whoever reads the more recent paper. It's easy to dig down if you want to find the source of it all (I have had to do this quite a few times in my career) but often this is overlooked.

I've seen this happen in other scientific fields including physics and even thruster development. It also occurs in the Met Office bucket corrections where they reference a 20+ year old experiment rather than continually re-characterise and minimise the use of assumptions. The chain of references is implicitly based on the idea of trusting the past without repeating or at least critically assessing the work.

This is all common sense to anyone who has to do some sort of metrology and especially if it has consequences in the real world. So this reconstruction makes for an interesting theory paper, and even as a way to describe discipline in fields such as dendrochronology.

But it has no place in any official policy document and cannot be used as a source to claim anything about past or current temperature variations.

ATTP

The post starts with "Rob Wilson emails a copy of his new paper". That means that Rob Wilson himself gave the paper out here for criticism and debate so he should not be such a princess.

And looking at the "tone" at your site (including your own) neither should you be the one to be talking of any tone on other sites.

Rob Wilson

"...if you knew me - arrogance is not really the correct term - a weary flippancy maybe. From years of minimal interaction with CA and BH, I see constant blog comments from people who are NOT willing to engage with the relevant literature. "

Sorry, man, but this is a lame excuse as your "weary flippancy" was empty snark about Steve whom you should know better, not those "people"

ATTP:

Says the man who called Richard Tol a liar on this site and then, when called on it, claimed that all he said was that Tol was stating things he knew not to be true. I hope you have two mirrors in your bathroom, Rice; one for each face.

Rob:

I appreciate and admire your willingness to engage here. Did anyone think to get Steve McIntyre involved in the writing of the paper?

Heh, bernie, that would have been bad for careers, dontcha know.

==========

Instead, it appears the whole dendroclimatology community has spent a decade exploring how data snooping and statistical chicanery can preserve the alarm, by pretending to pull sense from nonsense. They've fooled a lot of people, but first they had to fool themselves, and what a waste these fools have made.

=====================

This site appears not to allow for comment indenting to track responses to previous comments. So, regarding the comment by Steve McIntyre, Jan 15, 2016 at 3:16 PM:

I'm not particularly interested in defending the Jacoby etal work he is referring to (Climatic Change 14:39-59, 1989), because it has some issues, the unavailability of the raw data used being the main one, and methodological questions. I could raise a number of issues, but they're not the ones he raises.

However, the characterization of the criterion Jacoby used to choose his 11 series is wrong. He did NOT choose "the 10 most "temperature sensitive" chronologies from an inventory of 35 or so, plus the very HS Gaspe series..." Jacoby and D'Arrigo are instead clear that they chose series by this criterion: "The eleven chronologies were selected as those with the best common variance in the rednoise analyses." This "rednoise analyses" refers to series in which the temporal autocorrelation had been retained, after ARSTAN detrending, that is, the longer term component of the ring series' variance. Furthermore, earlier in the paper they expand somewhat: "Selection criteria include strong common ring-width variation for both low and high frequency [variation] for a site, and the physiographic site characteristics." This common variance presumably comes from the minimum standard practice of two cores per tree from at least ten trees. So...it's clear that they chose chronologies based mainly on their common variance, along with judgments having to do with site factors, i.e. on the fact that south facing exposures showed the greatest common, longer term, variance, of the sites they sampled, combined with the avoidance of sites that showed evidence of moisture stress.

They then computed three principal components (PCs) from the collection of sites, for each of three target years (target year and the +/- one-year lag therefrom). The estimated relationship to T comes then in the NEXT step, that is, regression of the 5 PCs explaining the most variance, on temperature.

Temperature, and its correlation to ring widths, had nothing to do with the choice of site chronologies used.

Steve McIntyre further states: "In simulations with series with high autocorrelation (as are Jacoby chronologies), picking the 10 most "temperature sensitive" of 35 series necessarily yields a HS-like series - a point discussed very early on at Climate Audit and independently reported over the years..." etc etc.

NO.

Jacoby etal present no data on the AC of their series, so we don't know how high it is. What they DO present, however, is the results of a simulation (possibly the first to do so, not sure) in which they created random T series with the same AC levels as the North American T data they employed, and then tested by Monte Carlo how often they would achieve the R-squared magnitudes they observed in their six regression models: "...we also tested for the probability of producing the regression results by chance alone. Monte Carlo techniques were used to produce random-number time series with the same autoregressive properties as the 'real' tree-ring reconstructed temperature data. Regressions were then performed with the same number of variables, leads and lags (based on the first and second eigenvectors) as the actual regressions to estimate temperatures. 500 simulation runs were made of each regression. The results indicate less than a 5% chance of producing the actual regression R [squared] results in 5 of the 6 regressions shown in Table III. The chance for producing joint occurrences of the 'significant' random R[-squares] and verifications for the 4 calibration-verification results is much less."
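A test of the kind quoted above can be sketched in a few lines. This is a simplified illustration, not a reproduction of the Jacoby and D'Arrigo procedure: it assumes AR(1) noise with a single coefficient `phi`, uses plain least squares with an intercept, and leaves out the leads, lags and eigenvector steps. The function names, the 80-year record, the observed R-squared of 0.4 and the other parameter values are hypothetical.

```python
import numpy as np

def ar1(n, phi, rng):
    """AR(1) random series: x[t] = phi * x[t-1] + white noise."""
    x = np.zeros(n)
    e = rng.standard_normal(n)
    for t in range(1, n):
        x[t] = phi * x[t - 1] + e[t]
    return x

def r_squared(X, y):
    """R-squared of an ordinary least squares fit of y on the columns of X."""
    A = np.column_stack([np.ones(len(y)), X])   # add an intercept column
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    return 1.0 - resid.var() / y.var()

def monte_carlo_p(observed_r2, target, n_predictors, phi, n_runs=500, seed=0):
    """Fraction of runs in which random AR(1) predictors match or beat observed_r2."""
    rng = np.random.default_rng(seed)
    n = len(target)
    hits = 0
    for _ in range(n_runs):
        X = np.column_stack([ar1(n, phi, rng) for _ in range(n_predictors)])
        if r_squared(X, target) >= observed_r2:
            hits += 1
    return hits / n_runs

# Hypothetical example: an 80-year autocorrelated target and three random predictors.
demo_rng = np.random.default_rng(3)
target = ar1(80, 0.5, demo_rng)
p_value = monte_carlo_p(observed_r2=0.4, target=target, n_predictors=3, phi=0.5)
print(f"share of random runs reaching R^2 >= 0.4: {p_value:.3f}")
```

Note what this does and does not test: it asks how often random predictors reach a given R-squared in the regression step. It says nothing about any selection step applied before the regression, which is a separate question.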

This is the very key point here, as it flies squarely in the face of all these dubious arguments amassed over the years regarding spurious correlations and hockey sticks due to AC in the time series used. It's not in fact that difficult to show that, given the results of most studies, the same basic finding of Jacoby etal would hold true there as well.

"....[D]ata snooping and statistical chicanery can preserve the alarm, by pretending to pull sense from nonsense. They've fooled a lot of people, but first they had to fool themselves, and what a waste these fools have made." --kim

These are not necessarily conscious processes. Perhaps, "appearing to pull sense from nonsense" is more to the point. There's a wide spectrum of both ability and honesty in dildoclimatology.

OK, I averaged all 54 data series from 1710 to 1988. The period for which they all have data. Then I plotted the results without any statistical nonsense. Here is the result. As can be seen temperatures have been rising steadily since the early 1800’s, long before CO2 was an issue.

This is VERY STRONG evidence that CO2 is not the cause of the current warming!!

http://oi67.tinypic.com/14b6g6d.jpg

To this statistician, who needs to be reminded frequently of the meanings of 'mean', 'median', and 'mode', the key point is: 'Jacoby et al present no data on the autocorrelation of their series, so we don't know how high it is'. So you ask us to trust their chicanery? I will let better statisticians tell me whether or not this is important, but I'm not going to listen to a committed, career, dendroclimatologist on this matter.

Sorry, Jim B., though I know that you are, relatively speaking, one of the good guys, but the dendroclimatology crew lost all hope of future credibility when they rallied around the Piltdown Mann's Crook't Stick a decade ago instead of cutting the dross and addressing the criticism. The decade since has seen ridiculous efforts to support the alarm, and few efforts to address the critiques. I wouldn't say it's too late to change your fates, but I've seen precious little effort so far.

=======================

I think folk are talking past each other. Screening for a temperature signal locally or regionally is good science - otherwise you don't have a proxy for temperature but maybe for something entirely different. It was screening against the 'global' temperature record, by stats routines that could not recognise anomalies even relative to neighbouring trees, never mind the local temperature, that is the bad-science data-snooping issue.

Jim Bouldin, I will leave Steve McIntyre to answer for himself on your comment, but it did get me interested in what "the best common variance in the rednoise analyses" was selecting for. Unfortunately I can't see a non-paywalled version of the paper online, but there are a number of documents setting out the capabilities of ARSTAN in detrending.

To confidently state that the selection criteria were not biasing the results one would need to know what was the stated purpose of the detrending, which options were chosen, how was the common variance established, and was the hypothesis that this preprocessing was actually dealing with the stated problem (and could it taint the results). ARSTAN gives considerable flexibility to modify the variance of the series (even if the stated aim is to remove exogenous factors), so care needs to be taken.

Can you help fill in some of the gaps?

Sorry should be "hypothesis tested".

Rob Wilson,

How is it that NTrend no longer illustrates a divergence problem that was exhibited in nearly every TR data set? Neither D'Arrigo nor Schneider show the abrupt uptick in temperature in the last decades. It seems inconceivable that any new data set could overwhelm previous studies to create the new abrupt warming. Furthermore the decades of 1160, 1950, 2000 exhibit no statistically significant difference. That suggests that whatever data sets caused the rapid rise in the most recent decades of NTrend have also added great uncertainty.

In your 2007 paper you wrote, “No current tree ring based reconstruction of extratropical Northern Hemisphere temperatures that extends into the 1990s captures the full range of late 20th century warming observed in the instrumental record.” Now NTrend corresponds to the instrumental data. Were some/most data sets exhibiting divergence eliminated?

Your 2016 paper states the new chronology uses "temperature-sensitive" tree rings. Part of that selection process was eliminating data sets from below 40 degrees North. Were other data sets exhibiting divergence eliminated due to suggestions that those trees "became temperature insensitive" since 1950?

Screening for a temperature signal locally or regionally is good science - otherwise you don't have a proxy for temperature but maybe for something entirely different.

=========================

not correct. Screening or calibration as it is known in climate science is well recognized in the other soft sciences as "selecting on the dependent variable". Google it. It is forbidden in statistics because it gives spurious correlations. All sorts of nonsense in Medicine, Economics and Psychiatry is now recognized to be caused by this problem.

The problem is that it is counter-intuitive. We think we are making the sample "better" but we are not, because hidden in the sample are false positives. Trees that appear to be responding to temperature, but are in fact responding to chance combinations of other factors that mimic temperature. This causes us to over estimate the reliability of our findings.
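The false-positive effect described above can be sketched with a toy simulation. Everything here is an assumption made for illustration: the "proxies" are pure AR(1) noise with no temperature signal at all, the "temperature" is a straight rising line over the last 100 years, and the screening threshold of 0.3 is arbitrary. It is not a reproduction of any published screening procedure.

```python
import numpy as np

def ar1_matrix(n_series, n_years, phi, rng):
    """Rows are independent AR(1) noise series with lag-1 coefficient phi."""
    noise = rng.standard_normal((n_series, n_years))
    x = np.empty_like(noise)
    x[:, 0] = noise[:, 0]
    for t in range(1, n_years):
        x[:, t] = phi * x[:, t - 1] + noise[:, t]
    return x

rng = np.random.default_rng(1)
n_series, n_years, n_cal = 1000, 400, 100

# Hypothetical proxies: noise only, no temperature information.
noise_proxies = ar1_matrix(n_series, n_years, phi=0.7, rng=rng)
# Hypothetical rising "instrumental" record covering the last n_cal years.
temperature = np.linspace(0.0, 1.0, n_cal)

# Correlation of each series with temperature over the calibration window.
cal = noise_proxies[:, -n_cal:]
cal_c = cal - cal.mean(axis=1, keepdims=True)
t_c = temperature - temperature.mean()
r = (cal_c @ t_c) / (np.linalg.norm(cal_c, axis=1) * np.linalg.norm(t_c))

# Screening step: keep only the series that "respond" to temperature.
passed = r > 0.3
screened_mean = noise_proxies[passed].mean(axis=0)
unscreened_mean = noise_proxies.mean(axis=0)

r_screened = np.corrcoef(screened_mean[-n_cal:], temperature)[0, 1]
r_unscreened = np.corrcoef(unscreened_mean[-n_cal:], temperature)[0, 1]
print(f"series passing the screen: {passed.sum()} of {n_series}")
print(f"calibration r, screened mean:   {r_screened:.2f}")
print(f"calibration r, unscreened mean: {r_unscreened:.2f}")
```

Every series that passes the screen is a false positive by construction, yet the screened average tracks the calibration-period temperature. Whether real chronologies behave like this noise model is exactly what is in dispute, so the sketch shows only the mechanism, not its size in practice.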

If someone says they have calibrated the tree rings, throw the paper out. 9 times out of 10 the findings will be rubbish.

ferdberple. I was being somewhat more polite. It seems strange that people who clean up their data in the name of removing what they regard to be non-temperature data don't realise that at that point they have introduced a view on the temperature into their analysis.

I was being polite when I said "9 times out of 10 the findings will be rubbish." The reality is more like 99 out of 100.

HAS (Jan 16, 2016 at 10:22 PM):

I much like your question--it indicates that you know enough about this whole topic to ask a good one.

There is no question that removing the non-climatic trend is big-time troublesome, at least when ring widths are the response variable. ARSTAN will detrend a ring series in several different ways--Jacoby etal 1989 used mainly negative exponential and linear fits. In doing so, they were trying to avoid removing any long-term climatic trends from the data. But such curve fits in no way guarantee that the non-climatic trend is properly removed, even apart from issues like CO2 fertilization and other possible drivers, which complicate the problem even further.

This reality is what spawned the renewed interest in an old method (RCS detrending) that Keith Briffa sort of brought to everyone's attention a few years after this paper (early 1990s), and which was unquestionably a step forward analytically, but except for a few cases, not in actual practice. The problems with RCS detrending, which are by no means obvious, but still understood by some, are a big part of my 14 part "Severe analytical problems..." series which are all linked to at this page: https://ecologicallyoriented.wordpress.com/archive-science-only/

What Jacoby etal were attempting was to select sites based on the highest long term coherence in growth rates between the sampled trees at a site. For this they deserve a lot of credit IMO, because coherence between trees is often thought of, and measured as, a short term (yearly) phenomenon, that is, using year to year variation. However, it's also not clear to me exactly how they went about so doing, because well, they don't explain it really, and the devil of course is often in the details. So, if your question is along the lines of "how do we know how well they really had sites with the best long-term growth rate coherence", I agree, that's a legitimate question--and the answer is we don't. But we do know that it had to be some type of comparison of long term growth trends because that's the only way to proceed. We also know that once this procedure was done, they found a strong relationship between the first few axes of RW variation *between* sites (principal components), and temperature, one that had a very low probability of occurring by random chance, given the autocorrelation levels in the actual T data. It is therefore entirely justifiable to conclude that the common response shown by the ring widths, is due to some driver operating at large spatial scales (T) even if yes, there might indeed be some common CO2 fert (and other non-climatic) effects being subsumed therein.

It appears to me that the paper is downloadable as a .pdf file straight from Springer, at least it is for me: http://link.springer.com/article/10.1007%2FBF00140174?LI=true

I don’t get it, merely as one of the mugs who are paying through the nose for carbon-based energy as a result of the current hysteria.

I don’t understand why screening for a temperature signal is “good science”.

Either trees generally are reliable thermometers or they’re not; it’s utterly implausible that some trees are and some trees are not. The local conditions at time of sampling are irrelevant.

If they are reliable proxies then screening is unnecessary, if not then the whole exercise is pointless.

In the latter circumstance screening is very bad science aimed at obtaining a fraudulent result.

Jim Bouldin, having glanced at your blog I'd suggest one helpful way for you to reframe the problem is to stop thinking about it as "removing the non-climatic trend" (or more generally "non-climatic variability"). This suggests a simplifying deterministic solution is available to what is a probabilistic problem.

When you remove trends/variability you are assuming you can do so independently of the rest of the system and (I suspect) that the uncertainty in the removed parameters can be ignored. So "removing" trends etc throws away information about best estimates (if the requirement for independence isn't met) while artificially reducing uncertainty.

So in the end you do need a hypothetical model for tree growth that brings in all other factors of significance, and (assuming the relationship with temp is significant and demonstrated) there are a number of experiments (predicated on the models) that this can be used for when faced with sets of tree cores, out of sample. A significant limitation will be the lack of information about the other parameters, but as you note simulations can give some information despite this. (If some of the cores at a site are going to be used to generate estimates of these then the sub-samples need to be independent of each other).

But the important point is that the model is fitted warts and all to all cores from all trees that it has been validated for. In that way lack of independence is able to be tested and accommodated, and the uncertainty in the whole system preserved.

If the other variables swamp the temp parameter in some cores from an area, so be it, that is important information.

I should add that my understanding is that tree cores have a reasonably poor track record when compared with other proxies/instrumental records. And perhaps, if that's the case, that is all we can say with our current state of knowledge. You can't create information out of thin air.

If they are reliable proxies then screening is unnecessary, if not then the whole exercise is pointless.

=======================================

exactly. you cannot screen tree rings using temperature data and then hope to use them as a statistical proxy for temperature. this is forbidden in mathematics.

consider this. you are trying to solve:

temperature = function (tree rings)

but when you filter based on temperature you change this to:

temperature = function (tree rings, temperature)

and statistics solves this as:

temperature = 0 * tree rings + 1 * temperature

so, what you have done is to make the tree rings irrelevant. it doesn't make the slightest difference if they are good proxies or not, your conclusions will be exactly the same.
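Taken literally, the last equation is what ordinary least squares returns whenever the target variable itself sits among the predictors. The sketch below shows only that piece of algebra, using synthetic data in which the "tree rings" are deliberately unrelated to temperature. It is an illustration of the analogy, not a model of how any actual reconstruction was screened.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200
temperature = rng.standard_normal(n)   # synthetic target
tree_rings = rng.standard_normal(n)    # synthetic, unrelated to temperature

# Regress temperature on [tree_rings, temperature]: the target is a predictor.
X = np.column_stack([tree_rings, temperature])
coef, *_ = np.linalg.lstsq(X, temperature, rcond=None)

# The fit puts (numerically) all the weight on temperature itself.
print("weight on tree rings: ", coef[0])
print("weight on temperature:", coef[1])
```

The weight on the tree rings comes out at zero to within rounding error whatever the ring data are, which is the sense in which they become irrelevant in this extreme case.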

and because of this, the social sciences are full of bogus conclusions, because the statistical analysis was not drawn from random samples, but rather samples that were selected based upon the variable the researchers were hoping to study.

https://www2.warwick.ac.uk/fac/soc/economics/staff/vetroeger/publications/bjps_pluemper_troeger_neumayer.pdf

pg5

In addition, algorithms that exclusively or partly use information on the dependent variable perform far worse than algorithms that refrain from doing so. On average, the worst performing algorithm, which selects on the dependent variable with no regard to variation of the variable of interest or of the control variable, is roughly 50 times less reliable than the best performing algorithms.