Bishop Hill

ECS with Otto

May 20, 2013

Climate: sensitivity

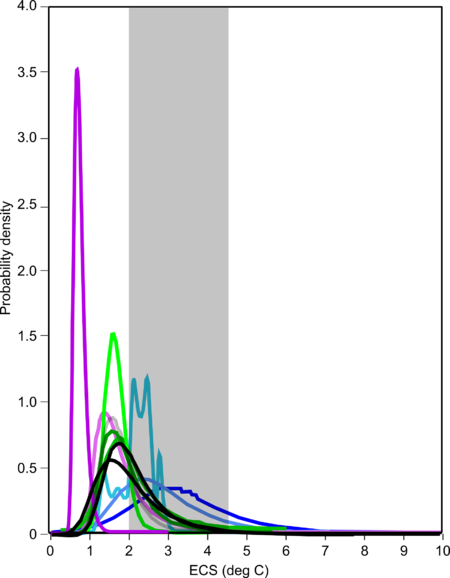

Further to the last posting, and in particular the claim in the BBC article that the 2-4.5°C range is largely unaffected by the Otto et al paper, here's my graph of ECS curves incorporating the Otto et al results: both the full-range and the last-decade curves, shown in black. As before, the other studies are coloured purple for satellite-period estimates, green for instrumental and blue for palaeo estimates. The grey band represents both the IPCC's preferred range and the range of the climate models.

As you will see, it is fairly clear that the Otto et al results slot in quite nicely alongside the other recent low-sensitivity findings, with most of the density outside the range of the models. The IPCC's preferred range looks increasingly untenable.
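For readers wondering how energy-budget estimates like Otto et al's are produced: as I understand it, the method takes ECS = F_2x × ΔT / (ΔF - ΔQ) and TCR = F_2x × ΔT / ΔF, where ΔT, ΔF and ΔQ are the changes in temperature, forcing and heat uptake between a reference period and a recent one, and F_2x is the forcing from doubled CO2. The Python sketch below computes both and then builds a crude probability curve by Monte Carlo sampling. F_2X = 3.7 W/m², the Gaussian uncertainties and all the input numbers are illustrative assumptions, not the values in the paper.

```python
import random
import statistics

# Forcing from a doubling of CO2 (W/m^2). 3.7 is a commonly quoted round
# figure and is an ASSUMPTION here, not necessarily the value Otto et al used.
F_2X = 3.7


def energy_budget_ecs(delta_t, delta_f, delta_q, f_2x=F_2X):
    """Energy-budget estimate of equilibrium climate sensitivity (K).

    delta_t: change in global mean surface temperature (K)
    delta_f: change in radiative forcing (W/m^2)
    delta_q: change in the rate of heat uptake, mostly by the oceans (W/m^2)
    """
    net = delta_f - delta_q
    if net <= 0:
        raise ValueError("forcing minus heat uptake must be positive")
    return f_2x * delta_t / net


def energy_budget_tcr(delta_t, delta_f, f_2x=F_2X):
    """Transient climate response (K): the same ratio without heat uptake."""
    return f_2x * delta_t / delta_f


def sample_ecs(n, t, sd_t, f, sd_f, q, sd_q, seed=0):
    """Monte Carlo ECS samples from independent Gaussian inputs.

    Draws where forcing minus uptake is not positive are discarded, a
    simplification that keeps the ratio finite.
    """
    rng = random.Random(seed)
    samples = []
    while len(samples) < n:
        dt = rng.gauss(t, sd_t)
        df = rng.gauss(f, sd_f)
        dq = rng.gauss(q, sd_q)
        if df - dq > 0:
            samples.append(energy_budget_ecs(dt, df, dq))
    return samples


# Illustrative placeholder inputs, NOT the values in Otto et al.
print(round(energy_budget_ecs(0.75, 1.9, 0.65), 2))  # 2.22
print(round(energy_budget_tcr(0.75, 1.9), 2))  # 1.46
ecs = sample_ecs(20000, 0.75, 0.1, 1.9, 0.4, 0.65, 0.1)
print(statistics.median(ecs) < statistics.mean(ecs))  # True: long upper tail
```

Because the uncertain forcing sits in the denominator, the sampled curve is skewed with a long upper tail, which is the general shape of the ECS curves in the chart.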

Reader Comments (40)

No disrespect is intended to Nic Lewis, but I consider all the claims regarding the ability to assess climate sensitivity disingenuous, even bordering on the dishonest.

It may be possible to calculate how CO2 behaves under laboratory conditions, and hence a theoretical warming from increasing CO2 levels under those conditions. But that is not the real world.

In the real world, increased concentrations of CO2 would theoretically block a certain proportion of incoming solar radiation, so that less is absorbed by the ground and oceans, and would also increase the rate of outgoing radiation at the top of the atmosphere (TOA). Both of these are potentially cooling factors. Thus the first issue is whether, in real-world conditions, the theoretical laboratory ‘heat trapping’ effect of CO2 exceeds the ‘cooling’ effects of CO2 blocking incoming solar irradiance and increasing radiation at TOA, and if so, by how much. The second issue is far more complex: the interrelationship of CO2 with other gases in the atmosphere; whether its effect is swamped by the hydrological cycle; what effect it may have on the rate of convection at various altitudes; and whether convection effectively outstrips any ‘heat trapping’ effect of CO2 by carrying the warmer air upwards to the upper atmosphere, where the ‘heat’ is radiated to space. None of these issues can be assessed in the laboratory; they can only be considered in real-world conditions by way of empirical observational data.

The problem with making an assessment based upon observational data is that it is a hopeless task, since the data sets are too short and/or have been horribly bastardised by endless adjustments, siting issues and station drop-outs, and polluted by UHI, and/or we do not have accurate data on aerosol emissions and/or clouds. Quite simply, data sets of sufficiently high quality do not exist, and therefore, as a matter of fact, no worthwhile assessment can be made.

The nub of the issue is that it is simply impossible to determine a value for climate sensitivity from observation data until absolutely everything is known and understood about natural variation, what its various constituent components are, the forcings of each and every individual component and whether the individual component concerned operates positively or negatively, and the upper and lower bounds of the forcings associated with each and every one of its constituent components.

This is logically and necessarily the position, since until one can look at the data set (thermometer or proxy) and identify the extent of each change in the data set and say with certainty to what extent, if any, that change was (or was not) brought about by natural variation, one cannot extract the signal of climate sensitivity from the noise of natural variation.

I seem to recall that one of the Team recognised the problem and at one time observed: “Quantifying climate sensitivity from real world data cannot even be done using present-day data, including satellite data. If you think that one could do better with paleo data, then you’re fooling yourself. This is fine, but there is no need to try to fool others by making extravagant claims.”

We do not know whether, at this stage of the Holocene, adding more CO2 does anything, or, if it does, whether it warms or cools the atmosphere (or for that matter the oceans). Anyone who claims that they know and/or can properly assess the effect of CO2 in real-world conditions is being disingenuous.

For what it is worth, 33 years' worth of satellite data (which shows that temperatures were essentially flat between 1979 and 1997 and from 1999 to date, and demonstrates no correlation between CO2 and temperature) suggests that the climate sensitivity to CO2 is so low that it is indistinguishable from zero. But that observation should be viewed with caution, since it is based upon a very short data set, and we do not have sufficient data on aerosols or clouds to draw a firm conclusion.

Further to my post above, in which I suggest that it is impossible to assess climate sensitivity until we fully understand natural variation, consider a few examples from the thermometer record (HadCRUT4) from before the rapid increase in manmade CO2 emissions.

1. Between 1877 and 1878, the temperature anomaly change is +1.1°C (from -0.7 to +0.4°C). Producing that change requires a massive forcing. It was not caused by increased CO2 emissions, nor by reduced aerosol emissions. Maybe it was an El Niño year (I have not checked, but no doubt Bob may clarify), but we need to be able to explain what forcings were in play that brought about that change, because those forcings may operate at other times (to a greater or lesser extent).

2. Between 1853 and 1862 temperatures fell by about 0.6°C. What caused this change? Presumably it was not an increase in aerosol emissions. So what natural forcings were in play? Again, one can see a similar cooling trend between about 1880 and about 1890, which may to some extent have been caused by Krakatoa, but if so, what would the temperature have been but for Krakatoa?

3. Between about 1861 and 1869 there was an increase in temperatures of about 0.4°C. What caused the warming that decade? It is unlikely to be related to any significant increase in CO2 emissions and/or reduction in aerosol emissions. How do we know that the forcings that brought about that change were not in play (perhaps to an even greater extent, since we do not know the upper bounds of those forcings) during the late 1970s to late 1990s?

4. Between about 1908 and 1915 there is again about 0.4°C of warming. What caused the warming during this period? Were the same forcings in play as during 1861 to 1869, or different ones? It is unlikely to be related to any significant increase in CO2 emissions and/or reduction in aerosol emissions. How do we know that the forcings that brought about this change were not in play (perhaps to an even greater extent, since we do not know the upper bounds of those forcings) during the late 1970s to late 1990s? And if the forcings operative during 1908 to 1915 were different from those operative during 1861 to 1869, can all these forcings operate collectively at the same time, and if so, how do we know that they were not all in play together during the late 1970s to late 1990s?

One could go through the entire thermometer record and make similar observations about each and every change in that record. But my point is that until one fully understands natural variability (all its forcings and the upper and lower bounds of such and their inter-relationship with one another), it is impossible to attribute any change in the record to CO2 emissions (or for that matter manmade aerosol emissions). Until one can completely eliminate natural variability, the signal of climate sensitivity to CO2 cannot be extracted from the noise of natural variation. Period!

Having absorbed all these "climate sensitivity" estimates, I have the strong impression that, whatever the value, it will be cancelled out by the energy needed to produce water vapour.

Claiming we need to know 'absolutely everything' about natural forcings seems a bit strong, but I think richard verney (why the lower case?) is making excellent points, ones which should be considered along with Doug Keenan's search for a statistical model by which to judge recent changes in observables such as temperature (see, for example his recent post here: http://www.bishop-hill.net/blog/2013/5/9/no-let-up-for-the-met-office.html).

The invention of 'external forcings' was a device that allowed programmers to incorporate in GCMs a long list of things that would otherwise be very challenging to model, such as CO2 and aerosols. I am not convinced it has been successful in terms of producing useful forecasts, but I suppose we do not yet have adequate computer hardware and software for more direct modelling on a global scale, let alone the detailed observations that would seem to be required.

Richard

While I think your reasoning is correct, what it boils down to is that we can't say anything meaningful about this area because there isn't enough good quality data.

But this is true of the entire climatological field. A major reason they decided that 30-odd years of warming was a long enough period to declare a crisis was, surely, that they've only got 150 years of data.

Never mind what's in it - 150 years being enough is a fundamental presupposition without which there can be no field of study. The palaeo proxy studies are academically interesting, and probably fun to do, but they appear to fail in the modern era. This again takes us back to the core issue: that the modern era's 150-odd years of poor data is all there is.

The problem is that in insisting 30 years of the latest "trend" were enough to raise a panic, they must logically soon concede that a similar period, with added CO2 but without any warming, allays that panic.

This is an indigestible fact for the warmies to swallow, especially since the people telling them they are wrong are people they hate politically, and to whom they would never willingly concede a point. Without a logical response, they are left with smearing the messenger and closing down the debate.

CAGW strikes me as one of those ideas like alchemy. Alchemists reckoned there was a way to turn lead into gold. There is, but they didn't arrive at this view by way of an accurate intellectual model. It was simply an ill-informed guess that turned out to be right, not because it was properly thought out but because they made 1,000 such guesses, and of those one or two were bound to be right.

It may be possible to come up with estimates of future climate states, but maybe in about 1,000 years and even then probably to no useful degree of resolution.

Well said, Richard Verney. Saved me the trouble.

I agree RichardV.

It's OK saying EVEN IF we accept that CO2 is a "control knob" for global temperature (overriding everything else) then you're still over-estimating it, but it's a pretty big EVEN IF. There seems to be no evidence of it outside of a lab and I've still not even seen that convincingly. Rhoda has manfully (womanfully?) tried to get her "best evidence" of the CO2 effect in the real world but to no avail.

It reminds me of all those "red wine is good for you" surveys, usually followed six months later by a "red wine is bad for you" survey. Intuitively, it is just not possible to isolate a single input in such a complex system (in that case, diet) and draw meaningful conclusions. It is easy, however, to draw erroneous conclusions that support whatever case you are pushing.

I have just read a short book, "Thinking Statistically" by Uri Bram, where he cites "endogeneity" as a common reason for false statistical inferences. Basically, it is easy to say X is a function of Y ± random error, but in truth the error is not at all random and in fact contains all the unknown unknowns that make the original analysis worthless. Several real-life examples are given in the book.

That seems a reasonable summary of the climate debate with the random error = natural variation plus "well we can't think of anything else" logical crapola. Richard puts it in more detail and more eloquently above.
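Bram's endogeneity point can be made concrete with a small simulation. Its most familiar form is omitted-variable bias: if a hidden factor z drives both x and y, then fitting y = a·x + error quietly loads z's effect onto a. The Python sketch below uses made-up coefficients (the true effect of x is 1.0; the hidden factor contributes 2.0). It is a generic illustration of the statistical idea, not a climate model and not an example taken from the book.

```python
import random


def simulate(n, seed=0):
    """Synthetic data: y depends on x AND on a hidden factor z correlated with x."""
    rng = random.Random(seed)
    xs, ys, zs = [], [], []
    for _ in range(n):
        z = rng.gauss(0, 1)  # the "unknown unknown"
        x = 0.8 * z + rng.gauss(0, 0.6)  # x is partly driven by z
        y = 1.0 * x + 2.0 * z + rng.gauss(0, 1)  # true effect of x is 1.0
        xs.append(x)
        ys.append(y)
        zs.append(z)
    return xs, ys, zs


def centred(v):
    m = sum(v) / len(v)
    return [a - m for a in v]


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def slope_y_on_x(xs, ys):
    """OLS slope of y on x alone: z is silently folded into the 'error'."""
    x, y = centred(xs), centred(ys)
    return dot(x, y) / dot(x, x)


def slope_y_on_x_given_z(xs, ys, zs):
    """OLS coefficient on x when z is also included (two-regressor normal equations)."""
    x, y, z = centred(xs), centred(ys), centred(zs)
    sxx, szz, sxz = dot(x, x), dot(z, z), dot(x, z)
    sxy, szy = dot(x, y), dot(z, y)
    return (sxy * szz - szy * sxz) / (sxx * szz - sxz ** 2)


xs, ys, zs = simulate(50000)
print(round(slope_y_on_x(xs, ys), 1))  # about 2.6, not 1.0
print(round(slope_y_on_x_given_z(xs, ys, zs), 1))  # about 1.0
```

With these made-up numbers the naive slope converges to 1 + 2 × cov(x, z)/var(x) = 2.6, more than double the true effect, and nothing in the fit itself flags the problem.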

"Well said, Richard Verney"

Plus 1!

The original IPCC range seems to have been little more than a show of hands from people who stood to benefit handsomely from higher values.

The model-based estimates that confirmed those preconceived values (by a combination of circular logic and an aerosol deus ex machina parameter) were always meaningless, but maybe at last that is getting through to some people. The alarmists could hardly keep denying the truth forever, but they must still contrive to spin this tiny, benign and likely natural warming as potentially alarming so they don't lose their jobs.

The IPCC postulate that natural variation can be separated out from man's contribution by using such obviously poor models, with even poorer estimates of natural variation, is just hype.

"That seems a reasonable summary of the climate debate with the random error = natural variation plus 'well we can't think of anything else' logical crapola."

As has been said before, in the seventeenth century crop failure was quite obviously the fault of witches. This habit has persisted into the present day, though.

My favourite historical example of this is Enigma / Ultra. In WW2 Germany used an encoding machine so clever it was assumed that for all practical purposes, messages encrypted on it were unbreakable.

When it became clear that signals traffic was being broken and read fast enough for the other side to act on it, ze Chermans faced a choice between two explanations. Either:

1/ The allies had, in total secrecy, built some sort of array of electromechanical devices into which unbroken Enigma messages could be fed, and out of which a decryption key would emerge that would enable the messages to be read. This had been done in total secrecy and without a single leak, as a priority towards which almost unlimited intellectual capital must have been allocated, and it worked fast enough for the intelligence to be useful rather than emerging months later.

or:

2/ they had a rat.

Unerringly, they went for the second, essentially because the first was too complicated to imagine, and hence in reality "well we can't think of anything else" than 2.

And because they were paranoid anyway.

Excellent post, thanks Richard. That's one to keep for future reference.

Please could you clarify something? I had a look at the info on HADCRUT and CRUTEM to get an idea of the background. That shows that there was very little coverage pre-1900 - roughly 10% of the southern hemisphere, for example. I did not find it easy reading so maybe I missed something but, when temperature changes are given for the mid 1800s, how can we be reasonably confident that this was not just a local effect?

I am guessing that the vast majority of reliable records for that period come from Europe. Could it be that, say, a scorching summer in Europe would distort the "global" record?
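The coverage question can be illustrated with a toy calculation. Gridded averages typically weight each grid box by the cosine of its latitude (equal-angle boxes shrink towards the poles) and average only the boxes that have data. The Python sketch below is my own simplification, not HadCRUT's actual procedure: a 10° grid with a hypothetical +2°C hot spot over Europe, averaged once with full coverage and once with a hypothetical network covering only Europe.

```python
import math


def area_weighted_mean(cells):
    """Mean anomaly over observed cells, each weighted by cos(latitude).

    cells: iterable of (lat_deg, lon_deg, anomaly) for cells WITH data only.
    """
    num = den = 0.0
    for lat, _lon, anomaly in cells:
        w = math.cos(math.radians(lat))  # equal-angle cells shrink towards the poles
        num += w * anomaly
        den += w
    return num / den


def toy_grid():
    """Hypothetical 10-degree grid: zero anomaly except a +2 C European hot spot."""
    grid = []
    for lat in range(-85, 90, 10):  # cell-centre latitudes
        for lon in range(-175, 180, 10):  # cell-centre longitudes
            hot = lat in (45, 55) and -10 < lon < 30
            grid.append((lat, lon, 2.0 if hot else 0.0))
    return grid


grid = toy_grid()
full = area_weighted_mean(grid)
# Hypothetical sparse network: only cells between 35N-65N and 20W-40E report.
sparse = area_weighted_mean(
    c for c in grid if 35 <= c[0] <= 65 and -20 <= c[1] <= 40
)
print(round(full, 3), round(sparse, 3))  # 0.025 0.677
```

In this toy case the same regional heatwave moves the "global" mean by about 0.02°C with full coverage but by about 0.7°C when only the European cells report.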

J4R: they also were unaware, or were not advised, that at least one Enigma machine had been captured. When did they become aware of the decryption? My impression was that it was well hidden; the Allies went to great lengths to avoid an obvious direct response to decrypts.

Apologies for going O/T.

Richard Verney began thus:

Pat Frank wrote this in September 2007:

I agree with Pat and have done since before I read that. But does anyone think that by quoting this I am on topic for this thread?

What Nic Lewis has been doing, as I see it, since his breakthrough post "The IPCC’s alteration of Forster & Gregory’s model-independent climate sensitivity results" on Judith Curry's blog in July 2011, is auditing AR4 Chapter 9, and indeed AR5 Chapter 10 as it emerges. These IPCC people were making some errors, even in their own terms, in using real-world data to constrain TCR and ECS, and that is really the central part of the WG1 narrative. The resulting paper, published yesterday by some of the biggest names in the game, including the two lead authors of AR5 Ch 10, is a complete game-changer in my view.

So to start with 'bordering on the dishonest' and to keep piling on with the cheerleading for this is to completely miss the tremendous contribution Nic has made here.

May 20, 2013 at 1:16 PM | JamesG

"The original IPCC range seems to have been little more than a show of hands from people who stood to benefit handsomely from higher values."

Actually, James, the sensitivity range originally came from the Charney Report of 1979. It was taken from the work of a chap called Manabe and a chap called Hansen. Manabe estimated the climate sensitivity at 2C and (surprise, surprise) Hansen estimated it at 4C. Charney decided a margin of error of 0.5C was reasonable, so the range became 1.5C to 4.5C, with 3C being the likely sensitivity. 34 years and $100bn later the range is 2C to 4.5C, a change made in IPCC AR4.

You don't have to be a scientist to figure out that a sensitivity basically put together on the back of a fag packet that hasn't changed in 34 years is indicating that climate scientists don't understand sensitivity.

Richard, I agree that Nic Lewis has made a tremendous contribution in chipping away at the IPCC and their longings for tablets of stone, but it is still worth keeping the sensitivity issue in perspective. The article by Pat Frank you linked to also has these words in it:

geronimo: close, but not quite. The report is an eye opener though. Thanks for pointing me to it.

http://www.atmos.ucla.edu/~brianpm/download/charney_report.pdf

The Charney report, from 1979. Basically, it had two models, one from Manabe, the other from Hansen. The lower bound, from Manabe, was 2 deg C. The upper bound, from Hansen, was 3.5 deg C. They widened the lower bound by 0.5 deg C and the upper bound by 1 deg C, coming up with a range of 1.5 to 4.5 deg C for a doubling of CO2, with 3 deg C as the most likely value.

Climate sensitivity relates the increase in temperature to an increase in CO2 in the atmosphere.

This assumes increasing CO2 causes increased temperature

AND

the way CO2 causes increasing temperature is a constant.

This assumption seems more than a bit bizarre seeing as for the last 15 years or so temperatures have not risen whilst CO2 levels have continued to rise.

As my current favorite graph shows

www.woodfortrees.org/plot/esrl-co2/isolate:60/mean:12/scale:0.25/plot/hadcrut3vgl/isolate:60/mean:12/from:1958

changes in CO2 follow changes in temperature
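The woodfortrees link applies a chain of operators to each series. As I understand the site's help (an assumption worth checking there), mean:12 is a 12-month running mean, isolate:60 subtracts a 60-month running mean to strip out the long-term trend and leave the shorter wiggles, and scale:0.25 simply rescales the CO2 curve for display. The Python sketch below mimics that chain with trailing means (the site's exact alignment may differ, but since both series get identical filters, any alignment offset cancels) and then finds the lag that maximises the correlation between the two filtered series. The data are synthetic: a made-up "CO2" series constructed to trail "temperature" by 6 months, so the sketch should recover a lag near 6.

```python
import math
import random


def running_mean(xs, window):
    """Trailing boxcar mean (cf. woodfortrees mean:N); drops the first window-1 points."""
    total = sum(xs[:window])
    out = [total / window]
    for i in range(window, len(xs)):
        total += xs[i] - xs[i - window]
        out.append(total / window)
    return out


def isolate(xs, window):
    """Subtract a trailing running mean (my reading of woodfortrees isolate:N)."""
    means = running_mean(xs, window)
    return [xs[i + window - 1] - m for i, m in enumerate(means)]


def pearson(a, b):
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    va = sum((p - ma) ** 2 for p in a)
    vb = sum((q - mb) ** 2 for q in b)
    return cov / math.sqrt(va * vb)


def best_lag(lead, follow, max_lag):
    """Lag k maximising corr(lead[t], follow[t + k]); k > 0 means 'follow' trails."""
    best = None
    for k in range(-max_lag, max_lag + 1):
        if k >= 0:
            a, b = lead[: len(lead) - k], follow[k:]
        else:
            a, b = lead[-k:], follow[: len(follow) + k]
        r = pearson(a, b)
        if best is None or r > best[1]:
            best = (k, r)
    return best


# Synthetic monthly data (hypothetical): "co2" is "temp" delayed by 6 months,
# plus a linear trend and a little independent noise.
rng = random.Random(1)
n, delay = 600, 6
base = [math.sin(2 * math.pi * t / 40) + 0.5 * math.sin(2 * math.pi * t / 17)
        + rng.gauss(0, 0.3) for t in range(n + delay)]
temp = base[delay:]
co2 = [base[t] + 0.005 * t + rng.gauss(0, 0.1) for t in range(n)]

# Same filter chain on both series, in the order isolate:60 then mean:12.
temp_f = running_mean(isolate(temp, 60), 12)
co2_f = running_mean(isolate(co2, 60), 12)
lag, r = best_lag(temp_f, co2_f, 24)
print(lag, round(r, 2))
```

Subtracting a running mean turns a linear trend into a constant, which is why isolate:N lets a steadily rising CO2 series be compared with the much noisier temperature series at all.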

A simple explanation for changes in measured "climate sensitivity" is that no such thing exists:

there was a short period when CO2 levels and temperatures appeared to rise in step.

This led to one apparent value for climate sensitivity.

Since temperatures have stopped following rising CO2, lower values of climate sensitivity are found.

It is well known the first result of all research is - more research is needed.

What is the incentive for people to publish that there is no such physical effect as is implied by climate sensitivity?

It is clear that the climate sensitivity value, used by IPCC modellers was pure guesswork. We know it, and the established climate science community knows it. So when their projections of temperature versus CO2 levels are shown to be falsified by measurements, why do they look to such an empirically unsupported phenomenon as deep ocean energy storage? Or strange ideas about aerosols? Why do they continue to turn their backs on the scientific method by simply not admitting that they might have made a poor guess?

The IPCC First Assessment Report in 1990 predicted a temperature rise of 1C above then-current levels by 2025 (Policymakers' Summary, xi). Woodfortrees shows HadCRUT4 a shade over 0.3C up since 1990, with only 12 years to go to 2025. The "target" rise by now was about 0.7C. The uncertainty range in the Policymakers' Summary was stated as 0.2 to 0.5C per decade. The warming of 0.3C that we've seen in the more than two decades since FAR (so under 0.15C per decade) falls below the lower end of that range. Panic cancelled.
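The arithmetic in that comment can be checked in a few lines. The Python sketch below uses only the figures quoted in the comment (1C above 1990 by 2025, about 0.3C observed by 2013, a 0.2 to 0.5C per decade range); I haven't re-checked them against the FAR text or HadCRUT4, and a straight-line path is a simplification of whatever curve FAR actually projected.

```python
def rate_per_decade(rise_c, start_year, end_year):
    """Average warming rate (C per decade) implied by a rise over a period."""
    return rise_c / (end_year - start_year) * 10


far_rate = rate_per_decade(1.0, 1990, 2025)  # 1C above 1990 by 2025
expected_by_2013 = far_rate / 10 * (2013 - 1990)  # where that straight line puts 2013
observed_rate = rate_per_decade(0.3, 1990, 2013)  # ~0.3C seen by 2013

print(round(far_rate, 2), round(expected_by_2013, 2), round(observed_rate, 2))
# 0.29 0.66 0.13
print(observed_rate < 0.2)  # True: below the quoted 0.2 to 0.5 C/decade range
```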

They're also off on their sea level rise prediction by about 50% as well as far as I can see (quick look only though, happy to be corrected).

The most useful result of the latest round of sensitivity papers is that the credibility of the current round of spaghetti-code GCMs is holed below the waterline.

Maybe they'll find Trenberth's missing heat on the way down.

Now that climate scientists are starting to flee the sinking models, and we're seeing grudging acknowledgement that empirical data matters, it'll be time to look more closely at the quality of that data.

But let's make sure the Good Ship GCM Worship is down with the anglerfish first.

I've said before that just because you can conceptualise a climate sensitivity valid globally on a centennial timescale, it doesn't mean the concept can go without validation, and I'm not aware that anybody has succeeded in validating that conjecture. It's the kind of thing that climate scientists gloss over, hoping nobody will notice how inadequate the justification is.

But that doesn't mean this work is valueless. It pretty much does eliminate the possibility that any hypothetical CS can be large enough to worry about. That it does so by circular reasoning is nothing to worry about.

JEM:

Brilliant. I agree the GCMs are on their way down. But the most useful result of these sensitivity papers would be to halt bogus and harmful climate policies in their tracks. I'm not saying that will happen - but the law of unintended consequences can be a wonderful thing.

Richard Verney

Let us also not forget that temperature is itself only a proxy for heat energy: no account is taken of enthalpy in creating this rather dubious beast known as global average temperature. I don't know what it is that climatologists practise, but science it ain't.
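The enthalpy point can be made concrete. A common approximation for the energy content of moist air per kilogram is h ≈ c_p·T + L_v·q, where q is the specific humidity. The Python sketch below compares two hypothetical air parcels: the hotter, drier one carries less energy than the cooler, more humid one, so an average of temperatures need not track an average of energy content. The constants are rounded textbook values and the parcels are made up for illustration.

```python
# Approximate constants (ASSUMED round values).
CP_DRY_AIR = 1005.0  # specific heat of dry air at constant pressure, J/(kg K)
L_VAPOUR = 2.5e6  # latent heat of vaporisation of water, J/kg


def moist_enthalpy(temp_c, q):
    """Approximate moist enthalpy per kg of air (J/kg), relative to 0 C and dry air.

    temp_c: air temperature in C; q: specific humidity (kg vapour per kg air).
    Simplified: ignores the vapour's own heat capacity and potential energy.
    """
    return CP_DRY_AIR * temp_c + L_VAPOUR * q


desert = moist_enthalpy(35.0, 0.005)  # hot, dry parcel (hypothetical)
tropics = moist_enthalpy(30.0, 0.020)  # cooler, humid parcel (hypothetical)
print(round(desert), round(tropics))  # 47675 80150
```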

Maybe I'm just too cynical to believe that the eco-troughers and their revenue-hungry political puppets will give up that easily.

JEM: They won't give up but they may lose their grip on power. And that will happen - is happening, to my mind - as the stated intellectual foundations of the whole shoddy edifice are seen to be blown apart. Look at how many premier news sources covered this story within 12 hours of the paper being released. Covered it extremely badly in many cases, sure. But the foundations have been dynamited and I think everyone knows it.

@rhoda

The definition of climate sensitivity means there is some fixed number C such that, whatever the current temperature and whatever the current level of CO2, a doubling of CO2 will always raise the global average temperature (whatever that is) by C degrees. This is true for all time.

If it turns out that there is no fixed number C, either because it changes with time, with the current temperature, with the current level of CO2, or with anything else,

then the work is worthless

and much worse than that it is dangerous

as people are claiming this mythical number can be plugged into computer models to predict (sorry, project) the future

and on the basis of that, £billions are being needlessly spent, money which could be usefully spent on something worthwhile.
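In formula terms, the definition described in that comment is ΔT = C·log2(CO2/CO2_ref): the same warming C for each doubling, whatever the starting concentration. The Python sketch below simply evaluates that expression; the value C = 3.0 is hypothetical, and the "equilibrium" qualifier (the full warming once the system has settled) comes from the standard definition rather than from the comment. If C in fact varies with time, temperature or concentration, this one-line formula no longer applies, which is the comment's point.

```python
import math


def warming_fixed_sensitivity(conc, conc_ref, c_per_doubling):
    """Equilibrium warming (C) if every doubling of CO2 adds the same fixed amount.

    This is the logarithmic relationship implied by 'a fixed number C per doubling'.
    """
    return c_per_doubling * math.log2(conc / conc_ref)


C = 3.0  # hypothetical sensitivity, C per doubling
print(warming_fixed_sensitivity(560, 280, C))  # 3.0
print(warming_fixed_sensitivity(1120, 560, C))  # 3.0: same again, whatever the start
print(round(warming_fixed_sensitivity(400, 280, C), 2))  # 1.54
```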

Fervently hope so.

I suppose it's too much to hope for a wave of public revulsion, 'truth and reconciliation' programs and all that.

It'll just be quietly buried, and we'll spend the next three decades getting rid of the nasty little legislative legacies of this mess.

May 20, 2013 at 10:30 AM | richard verney

Damn right, and you saved me the trouble. All this Dr. Otto statistical guff misses the damn point: man-made CO2 is the point, with all that that entails. No signal is detected; climate sensitivity is not, by any stretch, all about MMCO2, or even the natural emissivity of CO2, and is therefore insignificant. Thus the 'green agenda': there is no point.

So, to the question Matt Ridley alludes to: what is the point?

"There is little doubt that the damage being done by climate-change policies currently exceeds the damage being done by climate change[...]"

We've been sold a wobbler and done up like kippers.

In reply to Richard Verney, who says:

"The nub of the issue is that it is simply impossible to determine a value for climate sensitivity from observation data until absolutely everything is known and understood about natural variation, what its various constituent components are, the forcings of each and every individual component and whether the individual component concerned operates positively or negatively, and the upper and lower bounds of the forcings associated with each and every one of its constituent components."

I completely agree that we should know all of the very difficult forcings, their relationship to each other, the complete microphysics of cloud formation, etc. I agree that the US and UK governments should be funding this basic research, and not ever more costly computer runs with data we know isn't yet right.

But surely, in the world we are in -- the political world, that wants to take your money and give it to the corporations that donate to their election (wind and solar firms), or to do the bidding of the powerful environmental lobbies, which today (in the US at least) have the money to run lots of TV ads against politicians who don't do their bidding -- we need to compare model results with actual temperatures, and simultaneously develop models which take into account the realities of actual temperature trends?

Nobody is going to wait for perfection, or at least the near perfection that Richard -- and I -- correctly wish for.

JEM

there is a suggestion that the stupidity may have exceeded its shelf life

http://www.globalpost.com/dispatch/news/kyodo-news-international/130518/eu-dial-back-measures-against-global-warming

Speaking of "stupid", this appeared in USA Today just before the CopanHagen conference.

https://lh3.ggpht.com/-MDjYFA0GVxI/UXYFaou7tiI/AAAAAAAAGU4/1YOm2NPS8B8/s1600/earthday.jpg

Dolphinhead: There are many such suggestions. Still not enough, but thanks.

JEM: I don't think we know how the end of the madness will play out. But I think your "wave of public revulsion, 'truth and reconciliation' programs and all that" may not be a million miles away. The general public are extremely aware of having been sold the dangerous global warming meme and they are also very irritated by energy price increases, without necessarily having always made the right connections between the two. This isn't a phenomenon that will 'go gently into that good night' - and that applies to either side.

I agree that the legislative mess is something else. Who knows on that.

RV

"Until one can completely eliminate natural variability, the signal of climate sensitivity to CO2 cannot be extracted from the noise of natural variation."

Abso-bloody-lutely!

May 20, 2013 at 4:56 PM | nTropywins

///////////////////////////

Absolutely right. When considering whether or not there is some energy imbalance, can one imagine another branch of science where the main data sets do not even measure the metric being investigated?

The most important data set is ocean temperature/heat content. This is so for many reasons: e.g., it measures the correct energy metric; the heat capacity of the ocean is vast compared to that of the atmosphere; and the oceans are the heat pump of the planet, distributing heat from the equatorial regions polewards and, with this distribution, also driving air circulation patterns. However, this is the weakest data set.

I have spent approximately 30 years studying ships' logs. Bucket measurements are rife with uncertainty and errors. More recent temperatures are, in theory, more scientific: they are obtained by measuring water temperature at the engine manifold, which in turn draws its inlet water from depth. This is not sea surface temperature but the temperature of water taken at a depth that depends upon the design and configuration of the vessel, whether it is fully laden, partly laden or sailing in ballast, and how the Master is trimming it (vessels are usually trimmed by the stern, and the engine room is also usually located at the stern). The difference can be quite large, say between 4 and 13 metres. So when a measurement taken by one ship is compared with a measurement taken by another, a like-for-like comparison is not being made.

Further, commercial interests arise with the operation of vessels. The vessel owner/operator may face claims for underperformance (slow steaming, excessive bunker consumption, failure to properly heat cargo, etc.), and the possibility of such claims may influence log entries. The crew may record weather conditions as more adverse than they actually were, currents as stronger or weaker, and sea temperatures as cooler than they were, or possibly even warmer (if an engine is suffering from overheating issues). I am not suggesting that such practices are uniform across the shipping industry, but there is room for abuse by the less scrupulous. All of this means that ships' data has to be viewed with an element of caution.

The oceans are so vast that the relatively few ARGO buoys deployed are unlikely to be representative. These buoys drift and are therefore not measuring the same stretch of water from year to year. We do not yet know whether this drifting is causing a bias (one way or the other). The data set is very short, and who knows how accurately it has been tuned to pre-ARGO data sets. It should be seen as a separate data set and not conjoined with earlier ocean temperature measurements.

There are simply huge margins of error in all data sets. Given these wide margins of error, how any climate scientist can claim 95% confidence in the conclusions of their research is beyond my comprehension.

richard verney

In addition to the heat content of the oceans, I have often wondered how much of the earth system's energy is manifest in ocean waves, and how the waviness of the oceans responds to changes in energy. It seems to me that this is another way in which the oceans act to buffer energy, but I have no idea what the magnitude might be. I suspect it is substantial.

Climate sensitivity is 'not as bad as previously thought'?

Well before we all celebrate with a BBQ perhaps someone had better tell Fiona Harvey, who is wetting her climate change pants over at Guardian Towers. She's covered Otto and is using such moderate language....

"Climate change: human disaster looms, claims new research.

Forecast global temperature rise of 4C a calamity for large swaths of planet even if predicted extremes are not reached"

Dis-information, much?

http://www.guardian.co.uk/environment/2013/may/19/climate-change-meltdown-unlikely-research

Very good cheshired.

Aye and as is their alarmist wont, they're all bedwetters 'n' all - no doubt.

Re the lovely Fiona, the cut-and-paste queen of alarmism: She obviously has not twigged that most of the public are aware that her 'journalistic' output is seriously flawed and is utterly irrelevant to anything resembling reality.

Random guy:

"Speaking of "stupid", this appeared in USA Today just before the CopanHagen conference."

I think that guy's t-shirt is missing an N.

BTW, are you ever going to tell us what number you have in mind for "lag", or was that just a shoot-from-the-hip response at the bottom of this comment list?

http://www.bishop-hill.net/blog/2013/5/16/lewis-responds-to-nuccitelli.html#comments

This curve-fitting exercise has discovered that the more decades global temperatures stay flat or go down, the lower climate sensitivity gets... The whole "work" is a hindcast interpretation. Let's imagine global temperatures went steeply down for a decade: then this hindcast curve fitting would show climate sensitivity to be even lower. It's only a matter of decade selection.

All this "work" leads to nothing meaningful, for five macro-climate forcings were excluded, and one micro-forcing, CO2, is grossly inflated; see http://www.knowledgeminer.eu/eoo_paper.html.

JS.