Bishop Hill

Bishop Hill Cook’s consensus: standing on its last legs

Oct 11, 2013

Oct 11, 2013  Climate: Sceptics

Climate: Sceptics This is a guest post by Shub Niggurath.

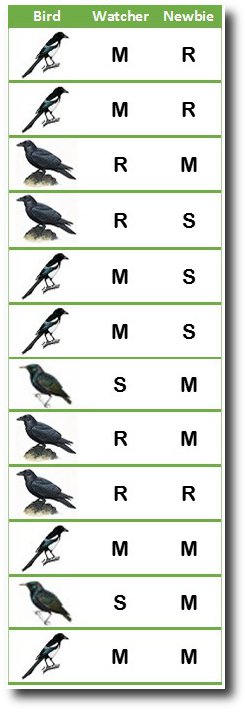

A bird reserve hires a fresh enthusiast and puts him to do a census. The amateur knows there are 3 kinds of birds in the park. He accompanies an experienced watcher. The watcher counts 6 magpies, 4 ravens and 2 starlings. The new hire gets 6 magpies, 3 ravens and 3 starlings. Great job, right?

A bird reserve hires a fresh enthusiast and puts him to do a census. The amateur knows there are 3 kinds of birds in the park. He accompanies an experienced watcher. The watcher counts 6 magpies, 4 ravens and 2 starlings. The new hire gets 6 magpies, 3 ravens and 3 starlings. Great job, right?

No, and here’s how. The new person was not good at identification. He mistook every bird for everything else. He got his total the same as the expert but by chance.

If one looks just at aggregates, one can be fooled into thinking the agreement between birders to be an impressive 92%. In truth, the match is abysmal: 25%. Interestingly this won’t come out unless the raw data is examined.

Suppose, that instead of three kinds of birds there were seven, and that there are a thousand of them instead of twelve. This is the exact situation with the Cook consensus paper.

The Cook paper attempts validation by comparing own ratings with ratings from papers’ authors (see table 4 in paper). In characteristic fashion Cook’s group report only that authors found the same 97% as they did. Except this agreement is solely of the totals – an entirely meaningless figure

Turn back to the bird example. The new person is sufficiently wrong (in 9 of 12 instances) that one cannot be sure even the matches with the expert (3 of 12) aren’t by chance. You can get all birds wrong and yet match 100% with the expert. The per-observation concordance rate is what determines validity.

The implication of such error, i.e. of inter-observer agreement and reliability, can be calculated. In the Cook group data, kappa is 0.08 (p <<< 0.05). The Cook rating method is essentially completely unreliable. The paper authors’ ratings matched Cook’s for only 38% of abstracts. A kappa score of 0.8 is considered ‘excellent’; score less than 0.2 indicates worthless output.

With sustained questions about his paper, Cook has increasingly fallen back on their findings being validated by author ratings (see here, for example). Richard Tol’s second submission to Environmental Research Letters has reviewers adopt the same line:

This paper does not mention or discuss the author self-ratings presented in the Cook et al paper whatsoever. These self-ratings, in fact, are among the strongest set of data presented in the paper and almost exactly mirror the reported ratings from the Cook author team.

The Cook authors in fact present self-rating by paper authors and arrive at 97.2% consensus by author self-ratings.

In reality, the author ratings are the weakest link: they invalidate the conclusions of the paper. It is evident the reviewers have not looked at the data themselves: they would have seen through the trickery employed.

[1] Sim J, Wright C. The Kappa Statistic in Reliability Studies: Use, Interpretation, and Sample Size Requirements. Physical Therapy March 2005; 85(3): 257-268. •• A well-cited review that provides good general description.

Reader Comments (137)

Andrew M & Richard Tol,

I'm a graduate of University of Queensland, late 60s. Is there a way in which I can help?

I can create some ways, but there is a chance I'd be counterproductive. Guide me.

I do cringe at the acts of UofQ sometimes, this matter in particular.

Also, I know Jo Nova. I have written an accepted post for her blog & submitted several more. I'm reasonably sure that she would like to publicise as much as she can from both of you, but she is desperately under-resourced. I don't detect any snub.

......................

Shub, your bird count example was beautiful. Thank you.

Should it be useful on a blog like this noted for its eloquent use of the English language, can I let it slip that we once had a beastly boss who was therefore nicknamed "The Count with the silent O". Now how can we work that into bird counts?

................

Australia was represented in the list of greenpeace alleged terrorists in the Russian rig affair. Now they are getting our new Foreign Minister involved to rescue them from the mess they made. I resent this use of taxpayer money. Lovely girl, our Minister Julie Bishop, but tough as nails. I hope she'll advise to grin & Russian bear it. How many Brits were caught?

......................

Did you know that these words were penned by Stefan Lewandowsky when at Uni of West Australia (or around that time)?

"Skepticism is not only at the core of scientific reasoning but has also been shown to improve people's discrimination between true and false information (e.g., Lewandowsky, Stritzke, Oberauer & Morales, 2005, 2009)." From MOTIVATED REJECTION OF SCIENCE NASA faked the moon landing Therefore (Climate) Science is a Hoax: An Anatomy of the Motivated Rejection of Science.

He's now at Bristol, close to the old Lacock Abbey that was occupied by Sharingtons (or ?Sherringtons) ca 1550. This was the location where descendent William Henry Fox Talbot took his first photo (announced in 1839) and so gained a place as one of the 3 main inventors of photography.

...................

Oct 12, 2013 at 12:44 AM | Unregistered CommenterBrandon Shollenberger Sorry Brendan, have to disagree. If people had a category of "unknown", the organisers of data would most likely reject that set and rely on the "known", in which case the bird analogy is apt.

.....................

On Cook, I can't say that a high % of the climate papers I read have a specific statement endorsing the postulate that humans are responsible for more than half of the observed global warming. It would be interesting to point to periods of global cooling like 1945-1970 and ask authors if half of this cooling was caused by man. The Cook methodology shows a confused experimental design that seems, from admission, to have been changed in mid-stream, which is often a bad move when investigating a stated hypothesis. Besides, the alarm buttons glow when you see a 97% agreement in social data, (or a correlation coefficient higher than 0.85 or so). If you get that kind of agreement in social studies, you should look at whether the interpretation has been assisted to a target.

...................

Cheers from Down Under Geoff (Scientist, retired)

Brandon

If you perform a classification exercise, the meaning, or implication of each rating is immaterial. Cook's rating scale has 7 points. There is no valid hierarchy amongst the ratings. If you assume their system works, each abstract or paper can be fit into precisely one single category. Lumping ratings, leaving out some, and other creative steps are useful only to hide meaningful distinctions which exist.

Ruth

You appear to have fallen for a trick Cook et al continually employ in the paper. Their 'endorse the consensus' is not an explicit 'endorsement'. It contains both abstracts that explicitly make statements and those that 'implicitly' support it.

The shallow consensus was chosen as a target for evaluation in the study. The abstracts themselves, as a direct result of the chosen key phrases, contains little information about even this shallow consensus. It is largely an undifferentiated mass of text. The chosen rating scale was *pre-determined*, with little consideration to what information is actually contained in the abstract. As a result of these three factors, the needle wavers wildly - reliable performance is not possible. The authors themselves perform no diagnostic tests to characterize their data.

Ruth Dixon:

At most, this post speaks to the precision of the SkS ratings, not the accuracy. There's no reason to say a proxy is bad because it is more susceptible to noise than another proxy. You cannot "invalidate the conclusions of the paper" by finding one proxy is less noisy than another.

Geoff Sherrington, I believe your response to me supports, rather than disputes, my position:

The majority of the disagreement referred to in this post comes from the difference between SkS raters rating an abstract neutrally and authors rating the paper as taking a position. If one only compared those cases where the SkS and authors took a position, there'd be much less disagreement.

By your phrasing, the majority of the disagreement referred to in this paper comes from SkS members rating things as "unknown." If we filter out that set like you suggest, the results of the test change dramatically. It's only by not doing what you suggest the organizers of the data would do that allows us to get the results found in this post.

Abstract rating will necessarily be less precise than full paper rating, but that lack of precision does not translate into a lack of accuracy. It does not translate into a lack of validity. It does not allow us to dismiss a paper's results.

The results of this test are entirely predictable and completely unremarkable. You would get the same results when comparing any two data sets that have different levels of precision. That's why the test is specifically designed to only be used when comparing ratings of the same thing.

shub:

This is true, but each paper does not have to fall into the same category as its corresponding abstract. As such, you cannot compare ratings of one to ratings of another with the test you're using.

Nonsense. Those steps are useful ways to analyze the data for patterns. It is perfectly reasonable to consider how many abstract ratings for any particularly category match the paper ratings. One certainly might like to know how many abstracts rated as a 1 were "correct" according to the self-ratings. I'm sure if we found every single one contradicted the self-ratings, you'd be promoting the result.

The reality is if one wants to know how much agreement there is in the ratings, what you say is "useful only to hide meaningful distinctions" is actually necessary. In fact, your method is the one which is "useful only to hide meaningful distinctions." You're the one treating the disagreement between "unkown" and anything else as the same as "strong support for AGW" and "strong rejection of AGW."

The only papers which are relevant to the consensus are those which actually tackle the problem of detection and attribution. If they don't directly address that and give reasons for attribution and deal with unknowns and make some attempt to estimate their magnitude, they don't matter. They don't affect the truth of the consensus either way and are not useful as evidence AND IT REALLY DOESN'T MATTER WHO THINKS WHAT ABOUT THEM. It really is non-productive to argue further about the methods or the data or whatever else is wrong with Cook and Lew and the rest of them. We are wasting our time with this nonsense, in my opinion. It doesn't matter. They got the headlines they wanted. We can't turn back the clock.

"Nonsense. Those steps are useful ways to analyze the data for patterns."

Hold on to your own adjectives and psychological assessments.

The ratings are explicitly defined categories. If there is any possibility of their interacting with each other, the chosen system is invalid. This is the point.

I am not militant about the use of kappa. I realised a while ago that the 'AGW community' doesn't use it and I've not seen the measure used in other blog posts. I understand that the entities being compared are not the same. But they should yield equivalent information and this assumption is not mine but the paper's. The kappa works better for the Cook group rating abstracts because they rated each abstract twice. As I mentioned before, they fail this test too. At the larger scale however, there are likely better tests. Secular factors (like fatigue and shortcut-taking) become more significant determinants of reliability when volunteers are rating thousands upon thousands of abstracts.

In unweighted kappa, the disagreement between ratings is indeed considered equal, though they may carry different meaning in the context what's being studied. Purely as a test for evaluation of a rating exercise, however, unweighted kappa is the best measure, most favourable to the system: it serves simply as a measure of distinction between ratings the raters are able to make, without regard to what the ratings themselves mean. Want to try kappa with weightings? It has been done. The Cook system only performs worse.

Look at the data directly. It can be reasonably assumed that greater information in the paper may shift the rating for a position. But can it subtract from the information stated explicitly in the abstract? Apparently, it can!

The Cook group rated 18 papers as "explicitly supporting >50%" position. Of these, authors shifted out 10 papers to categories 2, 3 , 4 and 7. They rated 214 papers as "explicitly supporting with no quantification". Of these, authors moved 55 to category 1. This is fine as you could say these papers had more information about a putative consensus position that was not in the abstract. But they also moved 84 papers out to ratings 3, 4, 5 and 7. On the whole, authors rated 33 papers on the skeptical side. None of these were identifed by Cook and friends as being skeptical. Of these, Cook even rated 10 to support the consensus. How would you explain these?

The simpler explanation is, the rating system doesn't perform the way they say it does.

@Rhoda

I couldn't agree more.

@Geoff

If you could get us a way into the U Queensland Gazette (or whatever it is called) ...

@Shub, Ruth

You seem to assume that when Cook approached the paper authors, the latter went back and re-read their own work before responding. I would think it is more plausible that they thought "what was that paper about again?" for about 10 seconds and then answered. An author's recollection of a paper may be very different from the actual paper.

shub, I have no idea what "psychological assessments" you're referring to, but I didn't make any. I also have no idea why you ask me to explain one set of disagreements when previously you told me separatig data out like that is "useful only to hide meaningful distinctions." Quite frankly, I can't see any connection between most of your latest comment and anything prior.

If you want to talk about other tests, fine. If you want to say other ways of examining the data show problems, fine. I'm not interested. Right now, all I'm interested in is the test discussed in this post. That test is meaningless. You seem to have acknowledged this in your latest response as you acknowledge the problem I highlighted with the test, but I'm not sure.

Please try to focus on what's being discussed. Changing the subject accomplishes nothing, nor does your hostile approach to this discussion. There are two possibilities. 1) Your test was bogus, and you should acknowledge such. 2) Your test was meaningful, and you should demonstrate such.

Demanding I explain things irrelevant to these possibilities is silly. Even if other tests showed a problem, that would not justify using a bogus test. In fact, that behavior is exactly what you criticize Cook et al for doing.

@Tol

I have no illusions how authors must have done their rating. I'm just playing along with Brandon here who believes everything must have happened just as Cook's group describe.

Brandon,

I've seen you starting these types of arguments and dragging them along a few times now. I am not interested in being a part of one. I am not acknowledging anything because you don't seem to understand what's being done. I don't think you have much experience in doing science either. Take care.

shub, your latest comment is pathetic. Not only do you use a petty jab at me to justify taking your ball and going home, you do so while creating a false and insulting impression of me in your comment to Richard Tol. If you want to insult me rather than resolve a simple disagreement, that's your call. I, however, will continue to point out nonsense as nonsense.

The test you used requires the sets compared to be measuring the same thing. The sets you used were not measuring the same thing. You have acknowledged this. As such, any reasonable view of this blog post must hold that it uses a bogus test.

You've defended your use of the test by claiming it is in line with what Cook et al did. You claim the use of abstracts as a proxy for the entire paper means "abstracts=paper." This is incredibly wrong. Proxies are almost always used because they have a meaningful, but not perfect, correlation with what they're proxying. For a proxy to equal what it proxies like you portray, it would need a perfect correlation. This means your formulation is false on its face. Moreover, Cook et al explicitly highlighted differences between abstract and paper ratings. That means their formulation could not be the same as yours.

You responded to pressure on this point by demanding I explain things that had no bearing on these issues. You implied these things somehow prove your original, fallacious test was appropriate because they'd show problems like it did. This is an obvious logical fallacy, one you accused Cook et al of using. It is also a blatant red herring.

During all this, you've been hostile and relied on insults to buffer your case. You have done this despite me consistently refraining from such behavior while making any argument.

It is appropriate you now insult me in a response to Richard Tol. Tol made a comment that contradicted earlier remarks he had made. I pointed out this inconsistency, and he ignored the issue. The implication is what he said was false, and he simply refuses to admit it.

You can insult me all you want. It will not change the truth of anything I just said.

Brandon, not everything other people besides you do is pathetic, incredibly wrong, nonsense, meaningless, completely inappropriate..., hmm... have I missed any pejorative adjectives or adjectival phrases used by you on this thread?

People might reasonably view your comments as belittling and even insulting. People might even reasonably respond to what they view as personal attacks with jibes of their own.

You create a very hostile environment that makes it difficult to have a fruitful discussion with you and it shouldn't be really surprising when people decide not to engage with you.

The discussion about the kappa test, its appropriateness and what can be learned in its application, is an interesting one. It is unfortunate it has become impossible to discuss this on this thread, where such a such a discussion is fully apropos, and instead have to discuss why needlessly bellicose verbiage is unhelpful in furthering discussion.

Carrick +1

Carrick, rather than cherry pick words to paint me as creating a hostile tone, why don't you try providing actual quotes that are out of line? Why don't you comment on the language of the other people posting who regularly use far most hostile language than I do, including the blog post itself? Why don't you do anything other than effectively say, "It's all your fault"?

While I wait for an answer I doubt will come, I'm going to take a minute to look at some of the words you paint as pejorative:

Meaningless - In statistics, a test being meaningless is a common problem. That's why you've called things such many times. For example.

Nonsense - People post nonsense on blogs all the time. It gets pointed out as nonsense all the time, including by you.

Completely inappropriate - Some statistical methodologies and tests are completely inappropriate for some (or even all) situations. There is nothing remarkable about pointing this out. And while I don't know of any cases where you used that exact phrase yourself, you've not been bothered by people in discussions with you using it.

You apparently have no problem with these words when you use them. You apparently have no problem with these words when many other people use them. You apparently only have a problem when I use them.

There are a lot of things one could say about you based on this. I'm not going to say any of them. I don't care about individuals, and I don't care about stupid discussions of personalities. If you have a case to make for poor behavior on my part, make it. Otherwise, all you're doing is chiming in to say, "I'm on that guy's side!"

Which is fine. But please don't pretend like you're siding with someone because I'm such a bad guy. You're not. The case you're presenting against me is weaker than one could present against dozens of people you never criticize for the same things.

dcardno, while you're welcome to express your support for people in their criticisms of me, I must ask: What exactly have I said that was out of line compared to what is commonly said in comments here or what was said in this blog post?

@Carrick

I don't think there is much to discuss, actually.

Either the two data-sets of Cook are comparable, and then the kappa statistic shows that Cook is a fool for claiming that they are the same where they clearly are not; or the two data-sets of Cook are incomparable, and then Cook is a fool for comparing them.

<<I>> ...Please, please can we stop talking about this piece of dreck?... <</I>>

<I> My first thought as well.

This is, of course, politics, not science. I can't see any point in trying to counter it with science. You need to counter it with politics. <I>

Absobloodylutely.

Stop giving the guy oxygen via all this exposure.

Brandon - I can't add much more than what Carrick said; that's why I responded with a "+1" rather than further discussion. I disagree (I suppose obviously) with your assertion that your comments are no more abrasive than what is commonly found here.

Dr Rodgers

The camp is always split between people who want to ignore Cook and his antics and those who don't. One however only needs to remember the Ed Davey interview with Andrew Neil on national television. These guys are adding pounds and dollars to electricity bills on the basis of this stuff.

Richard Tol still refuses to address the fact he's said contradictory things about what he has been told by John Cook. Nobody seems to mind. I find that strange. Oh well. As for what he is willing to say:

A kappa statistic cannot show two data sets are incomparable. All it can ever show is there is some difference between the data sets. The fact two data sets have a difference doesn't mean they're incomparable. Different things are compared all the time.

The strangest part of all this is Cook et al explicitly said there was a difference in the data sets. That means Cook et al should have made us expect the results this test gives. It's difficult to see how one goes from "expected result" to "anyone who didn't see this clearly didn't look at the data" (paraphrased).

dcardno, I see snide remarks in blog post after blog post here. This blog post directly insulted people for not agreeing to a criticism (that's completely wrong). The second comment on this post calls a piece of work "dreck." The proprietor of this blog chimed in to make the joke, "The University of Quackland."

I don't see how calling something "meaningless" could be a sign of poor behavior if all of that is acceptable. I certainly don't see why it should make people find it difficult to discuss simple and substantial criticisms of a test used in this blog post. Even if one feels my tone is horrible, my criticism is still clear and damning. It should be discussed, not ignored.

Sorry realize this is rather long - think it worth putting here- this page is quite old and quiet now :)

Regarding Cook et al acknowledgement of possible rater bias; on re-reading the paper, this part of his concluding analysis leapt out at me (my emphasis):

So it seems Cook et al choose to explain away any under rating of papers by some mystical ESLD effect that must have gripped the boys and girls at SkS. ;)

They even cite a scientific paper that shows how this effect can grip people. Namely:

Climate change prediction: Erring on the side of least drama? - Keynyn Brysse, Naomi Oreskes, Jessica O’Reilly, Michael Oppenheimer

I can see a couple of problems with this. The first one is minor. I.e. the fact Brysse et al is clearly designed to talk about proper working scientists not a bunch of "citizen science" volunteers who happen to mostly come from an activist blog.

The Brysse conclusion describes who it talks about:

Of course it seems typical that the little boys and girls at SkS play act like this, and put themselves in the same category as proper scientists doing "large assessments", so maybe we could let that delusion go? ;)

The worse thing though that struck me is shown in these excerpts:

"[Scientific reticence] may have exerted an opposite effect by biasing raters towards a 'no position' classification."

and

"[Under-counting of endorsement papers] suggests that scientific reticence and ESLD remain possible biases in the abstract ratings process.".

The writer makes these observations with an innocent face, as if pondering some great imponderable that may need some follow up work. Is the writer Cook himself?

One big problem with this pose can be seen by anyone who has read the leaked SkS forum, where this paper is discussed by the main block of raters together. This privileged person would see this "scientific" puzzling over the difference between author and rater difference is at best mysteriously self delusional or at worst clearly bogus.

The fact is that in the private forum Cook himself explicitly makes clear the desired assumptions to be made on bias to his fellow authors and more importantly, to the few raters who do most of the rating in the paper:

John Cook 2012-03-07 11:30:46:

On another occasion when John Cook says:

co-author and fellow mass rater Sarah Green responds :

2012-03-04 04:41:16

The innocent face pose alone; wondering in the paper that ELSD has somehow mysteriously gripped the raters - is enough to make me heave, but seeing rater/author difference *used* like this as part of the "scientific" conclusion seems pretty damn shonky if not paper damagingly deceptive.

Brandon:

If you want the point fairly discussed, wouldn't you want it dispassionately discussed? People shouldn't "win a debate" based on who is able to keep a level head, while the other person is insulting them. I wouldn't personally want threads to get hijacked over the tone of comments I had made. I would feel that I had failed to communicate myself effectively.

This shouldn't be about winning debates, it's not that way for me, it should be about understanding the best method for testing (in this case) the internal validity of assumptions made in the Cook paper. As to this:

Cook is making the assumption that it is appropriate to compare the two data sets when he makes the comparison of lumped rankings. As shub outlines, Cook then uses a very weak method to demonstrate consistency of his own data set. What shub is showing is that at if you use a stronger statistical test, even if you accept the premise that it is appropriate to compare the two data sets, then Cooks conclusions are still wrong.

In other worse, the kappa test is applied here as a test of the internal validity of the paper, and found to fail. You can argue whether this is the best statistic to use (it is overly conservative), but arguing that Cook's assumption of comparability of the two data sets is invalid misses the point of what is being tested.

We're just asking whether the paper is internally consistent or not. (In the software industry, this would be called software verification---does the program do what it purports to do. Software validation would be the equivalent of asking "is the assumption that the two data sets are comparable

In that respect, I don't agree with Richard Tol. There is much to be discussed here. I think part of what needs to be discussed when researchers analyze papers, is what sort of tests get made.

It might be blindingly obvious to you that the data sets aren't the same, but clearly there are plenty of people, Cook, Nuccitelli, all of the reviewers of their paper, the editor of the journal, and many others who don't agree with this conclusion.

That's why it is necessary to show that, even starting with the authors assumptions, the authors conclusions are unwarranted.

Now you can address (non-abusively) if you prefer that Cook didn't make this assumption. I'd have to say in response, he didn't make the assumption until he did, then he did so repeatedly. I would say you would be cherry picking his paper (and other comments he's made since) if you are really claiming that Cook doesn't think the two data sets can be compared.

His claims of external validity rests almost entirely on the comparison of their rankings with the authors. And acceptance of the external validity of the paper, as we've seen from responses to Tol's criticisms, also largely rests of the perceived consistency of Cook's lumped survey results with those of the authors.

Sorry truncated sentence:

We're just asking whether the paper is internally consistent or not. (In the software industry, this would be called software verification---does the program do what it purports to do. Software validation would be the equivalent of asking "is the assumption that the two data sets are comparable appropriate?")

@TLITB

Thanks for that.

Note that Cook has repeatedly refused to make public the results of his comparison of raters.

@Richard Tol Oct 14, 2013 at 6:38 PM

Yes I have learnt from this article illustration, and from what I am learning from all of you Pacific RIm Jaegers and Kaijus here (lol) and I think that seems the most interesting thing to see now. See how the raters themselves compare.

FWIW, for the record, I now see clearer what Ruth Dixon and Brandon Shollenberger say on the issue of comparing the rater abstract rating to author rating. Also looking into kappa (thanks wiki) it does seem there is too much differences in the categories being compared to make it meaningful. Especially, as Ruth DIxon points out, the Cook paper itself makes it explicit they expect differences:

I think there may need to be some Hegelian synthesis on the approaches here ...

Meanwhile my twopenneth :)

The Cook et al overriding approach is that they clearly assume there is a great yummy neutral pool that can be dipped into and they can then pull out a consensus plum at any time.

This seems to be the underlying SkS forum assumption, and their spin in public too. That they can blame ESLD for any shortfall.

For example notice they don't bother to tell you that at least 10% of their consensus papers were later change to be self-rated as neutral. Or heaven forbid say that the number of reject papers in the self-rated group, though still a small number, tripled! ;)

I am sure Cook et al would think those things boring observations to make, but to my mind, if this was a dry scientific paper, instead of an exciting call to action paper, they should also mention these differences.

All these differences maybe not a kappa calculations but a layman can see them when pointed out. Cook make merry on the 53.8% number being rescued from the neutral pool by the authors themselves - Cook seem to pose and celebrate their conservative approach by emphasising this "found" 53.8% - but yet then remain silent on any other observation of the same vein. This strikes me as an obvious omission.

What you get from Cook et al is a very shallow conclusion with really all the effort directed at the post-production special effects and spin in the media.

Their spin is egregious, but it is just spin. As a layman I can accept that it may be perfectly adequate for publication in peer review lit. pool that all the other scientists swim in.

It is up to scientists who swim in the same pool to care about this piss.

Some obviuosly don't - I notice Michael Mann retweeted author Dana Nuccitelli saying his paper and the Hockey stick are brothers in arms. Keep it up I say. This paper is never going to get better under scrutiny. And those that want to ride it's tail should pile on now :)

Maybe that is a hope for the scientific process? ;)

Leopard

The authors were handed the same rating scale the volunteers used.

It is understood that the prevalence, (i.e., distribution) of different ratings can impact the value of kappa. There is a correction that can applied to account for this. But the problem only would negatively affect Cook as the most prevalent category is '4', i.e., no position. '4' is the category that gives rise to '3' and '5' (as in, if you squint hard at a 'no position' abstract, you can see support for AGW)

Yeah know all that. In fact I am thinking of going on Mastermind and having this paper as my specialist subject ;)

The Leopard in the Basement:

We all agree that Cook admits there are differences, but we should all also agree he then goes on to assume that, even with this acknowledged issue, that it is appropriate to statistically compare the two samples. In other words, you must consider this statement of the authors in the context of the paper in which it was written.

If you argue that it is a set-in-stone premise of Cook et al. that the data sets are not statistically compatible, then you will have a hell of a time explaining the basis for his making the comparison, and especially explaining why anybody, especially just about anybody who has endorsed this craptastic paper, lists this statistical comparison as one of the elements of the paper:

If I were to criticize the kappa statistic, it would be because it is an "overly conservative" metric. I wouldn't criticize somebody for testing the internal validity of a paper.

Anybody with a research background knows that "we do this" and why. But that's why I disagree with Richard Tol there is nothing much to talk about here. If you aren't in research, perhaps internal validity is not something you think about on a day-to-day basis.

@Carrick

Picking up your subtle nudges on "research" didn't want to bug you so found the origin of this quote, myself:

This was in a (not sure which) reject letter to Richard Tol's criticism.

This does indeed sound like an ideal case for pointing out it is wrong. Using something like kappa maybe?

But which came first?! That defending statement or Cooks' paper?

I really don't think I'll need a "hell of a time" to say it was *after* Cooks* paper ;)

Carrick:

I do. That's why I discussed it dispassionately. For some reason, you've simply painted dispassionate comments of mine that used the same wording people (including you) regularly use as inappropriate. And then after insulting me with that, you've chosen to ignore my disputation while while suggesting insults are bad.

You're basically just begging the question here. Your question is predicated upon me being out of line (and in fact, you directly imply I've been insulting people), but I've disputed that, and you've done nothing to rebut my disputation. That means you're refusing to allow a chance for defense against your criticism while repeating it - begging the question and upon argument by assertion.

Your response is a non-sequitur. It fails to rebut what I said. It instead ignores what I said. As I clearly stated in what you responded to, the fact there is a difference in two data sets does not mean they're incomparable. Data sets with differences are compared all the time. It's perfectly normal.

You say "clearly there are plenty of people... who don't agree" with me, but there is no basis for this. All we can reasonably conclude is those people accept data sets that are different can be compared.

I clearly think Cook thinks the two data sets can be compared. In fact, I clearly think the two data sets can be compared. I don't know why you'd raise counterarguments to hypothetical points I might raise when I've clearly indicated the opposite of those points.

Quite simply, you've done nothing to address any argument I've raised. You've ignored my defense against your criticisms of me while repeating those criticisms. You've ignored the justification I offered for comparing the data sets while focusing on the idea they cannot be compared. Your responses are effectively non-sequiturs.

And to be clear, I'm saying that dispassionately and without abuse.

Brandon

Do you believe the author self ratings and volunteer ratings should not be compared with kappa, among other reasons, because the proportions of the ratings are different in both? Is that your contention?

Since you missed this point before:

Consider the following. You say above:

I agree with this. It is possible.

But is the reverse, possible? i.e., is it possible for an abstract for explicitly 'endorse' a position while the paper itself only implicitly does so?

Always wondered where "Cooking the books" came from.

shub:

Yes.

The differences in what was rated ensured the proportions could never be the same. At the very least, we'd expect abstract ratings to have more neutral ratings simply because abstracts have less information than full papers. That's akin to have two proxies with different amounts of noise. Using a kappa test in such a situation is silly as the test would find a large amount of difference regardless of whether or not the underlying signal is the same.

I didn't miss that before. As I responded when you raised the issue before, I don't care to discuss that. It has no bearing on what I said. Nothing I've said suggests Cook et al's ratings were well done. Their data could be complete garbage. That wouldn't change the fact the test you used was inappropriate and your interpretation of its results was wrong.

It'd be useful to examine how the paper ratings compare to the abstract ratings. That might show any number of problems. It might show the Cook et al data set is terrible. It just won't show the test used in this blog post had any validity.

Your proposition/hypothesis is that abstracts have more noise than full papers. And that they have "less information than full papers".

Then you say: "I don't care to discuss that" to findings that go against your hypothesis.

...........................................................

Let me tell where *I think* you are going wrong, and where you and I diverge in terms of evaluating this:

You are evaluating ratings and their implications both, at the same time. Just as Cook did. I say the rating scheme be evaluated independent of what the different ratings mean. Which is why you don't find anything wrong if ratings are collapsed.

If the 'true rating' of a paper (i.e., it's content) had an impact on what it was rated as, the ratings could only move in one direction, i.e., in the direction of greater information.

Leopard, see table 4 of the paper, which clearly relies on the ability to compare the two data sets, since this is what it does. The appearance of weasel words doesn't subtract from the much heavily weighted appearance of the assumption this table.

The quote I included was clearly labelled as a post facto quote by a referee. In the context that people viewing table 4 as a external validation of the paper, it is a completely reasonable thing to say "look...this is an overly weak test that was performed. If we use a more robust test, that conclusion cannot be drawn even if we errantly accept the premise."

Brandon thanks for the response.

So to be clear, you accept that 1) Cook assumes the data sets are inter-comparable and 2) that he made an inter-comparison in Table 4, correct? Further I assume you understand the concept and purpose of tests of internal validity.

What I got out of your discussion here here was really the inappropriateness of making comparisons between the two data sets. In particular this:

This shows a bit of a misunderstanding on your part though---kappa measures level of agreement, not accuracy.

If one is looking at abstracts only, and the other at full papers, that means they measuring different things and aren't really statistically inter-compatible. However, this speaks to the external validity of the paper: Based on your argument (and others such as Richard Tol), in my opinion, neither Cook's test nor shub's test should be used here. That is, Cook's assumption of the assumption of inter-comparability of the two data sets was itself flawed, so the comparison between the rankings from this study and the authors was completely meaningless.

Beyond that, your discuss becomes more about the overly conservative nature of kappa, which doesn't undermine its use here or elsewhere, but merely limits its applicability:

The way I would put it is, if you get strong agreement, the kappa statistic is informative. Low values of kappa can occur even when there is agreement between the two data sets, so low values of kappa are non-informative.

As I see it, there isn't anything ridiculous about using kappa to test for agreement between the two data sets, and your criticism that "That makes the test in this post completely meaningless" is wrong… had he found agreement, the results would had been meaningful. As to "this post is nothing but a test applied in obviously wrong way", you were arguing above that it was the wrong test, not wrongly applied. You need to watch these bombastic statements—they are fun to write, but my suggestion is remove them from the final copy.

I had one question here: You say:

I would view your description involves a mis-application of the kappa statistic. I think the proper thing to do is view "unknowns" as non-responses (so they don't enter into P(e)). I'd be interested in others' opinions on this.

I think I need a bit more nuance here:

Suppose we assume that the raters and authors rankings measured the same thing, and both were accurate. Then you'd get a high value of kappa.

Suppose they were both accurate, and the claim was made that they were inter-compariable. You can use kappa to test this hypothesis. A high value of kappa would indicate consistency with this hypothesis. Unfortunately a low value of Cohen's kappa would be non-informative.

In either case, application of kappa is meaningful, because in both cases you are hypothesis testing. It is only in the case, where there is no plausible hypothesis that the two data sets are inter-comparable, that shub's test would be an erroneous test to apply.

Carrick: Spot on

Carrick, you say:

Yet I specifically said kappa would show disagreement regardless of the data's accuracy. Why would you assume I don't understand what kappa shows when I described exactly what you describe?

First, shub drew strong conclusions based upon his test you acknowledge is non-informative. That means even if one disagrees with how strongly I made my criticisms, the general thrust is still correct. Second:

Prior to looking at the data, we know the data sets measured different things. That means we know the kappa scores will be low. The test cannot possibly tell us anything because we know in advance the kappa scores could never show agreement. It was a test guaranteed to give us a non-informative answer. That makes it meaningless.

As for me saying the test was applied in an obviously wrong way, you're arguing semantics. It is reasonable to say applying a test to a situation it should not be applied to is applying the test in a wrong way. People can reasonably feel part of applying a test properly is applying it to an appropriate situation.

One can apply filters prior to doing kappa testing, but that is not an inherent aspect of the test. As such, my description is exactly in line with what shub described for his post.

That said, one of my criticisms has been the differing levels of noise in the two data sets. That manifests primarily in the differing levels of "unknown" ratings. Filtering out those ratings would go a long way toward addressing the problem.

No. If there is an a priori reason to know the data has differences that will affect the kappa score, such as differing levels of noise, shub's test is meaningless. A test which is guaranteed to give you a particular answer regardless of the hypothesis you're testing is a meaningless test.

And to reiterate, even if one doesn't feel this test was meaningless, shub's interpretation of his results was wrong. That means the conclusion of this blog post is wrong, and the insult he made in it is unjustifiable.

shub:

Say what? You argued there are rating pairs that show mistaken ratings. That doesn't contradict the idea the two data sets have differing levels of noise. It doesn't say anything about it. It has nothing to do with anything I've said.

Assuming the abstracts and papers were clearly and properly written, sure. I have no idea why you're focusing on this issue though. It doesn't justify your kappa test in any way.

In fact, you're now acknowledging ratings could "move" between the data sets. That means you're acknowledging the ratings are not expected to be the same between the two. That invalidates your usage of the kappa test. If we know a priori the data pairs will not be the same, a test which shows those data pairs are not the same is meaningless. It tells us nothing we didn't already know.

You're tacitly acknowledging the test you used could never tell us anything we didn't know prior to the test. I don't see any way you can continue to defend this blog post.

Brandon, forget about kappa. I don't care about you editorial content either. Set those aside and let's us take one step at a time.

Is it possible for an abstract to explicitly 'endorse' the consensus (rating 1) and the whole paper to be one of 'no position' (rating 4)?

An abstract with rating 1 has information, i.e., it contains a explicit statement about the IPCC AGW consensus. Its corresponding paper, which presumably contains all information and claims made in the abstract, if assigned a rating of 4, would contains lesser information than the abstract.

Would this be possible? How would you explain it?

Remember, the basis for your rejection of kappa is that the two entities, abstracts and papers, contain different information and that the greater information present in the paper may not be in the abstract thus producing a legitimate discrepancy between the two which would affect the kappa adversely.

shub:

Why would I "forget about" the aspect of the blog post I've been discussing all along? Why should I suddenly and randomly discuss something else entirely, something that wasn't even mentioned in the blog post?

If you can give me a decent answer to those questions, then I'll "forget about kappa." Otherwise, I intend to keep focusing on what is actually discussed in this blog post.

I have no idea why you tell me to remember this. It has nothing to do with the issue you raised. The fact a legitimate discrepancy would exist says nothing about whether or not illegitimate discrepancies would exist.

I am asking you to discuss the basis for your rejection of the use of kappa.

I have. Many times. I can discuss it more. I can repeat myself. What I cannot do is discuss the basis for my rejection of the kappa test by forgetting about the kappa test and discussing something that has no connection to the basis for my rejection of the kappa test.

I decided to do a quick demonstration of why I say the test used in this post was meaningless. For it, I'm going to make a few assumptions to simplify things. First, every non-neutral rating is perfectly accurate. That means nobody made any mistakes. Second, the self-ratings and abstracts were perfectly representative. That means we can scale them to match each other, and the only difference will be in the amount of "noise."

I'm also going to lump together all "endorse" categories and all "reject" categories. This will decrease the amount of disagreement we'd expect to see, but for a simple example, it should be suitable. With that said, we have these self-ratings from the Cook et al paper:

The scaled abstract ratings would be:

Because of our assumptions, every non-neutral abstract rating will agree perfectly with the self-ratings. That means the only difference comes from abstracts rated neutrally where their corresponding paper states a position. This means the data can be represented as a 3x3 matrix:

Which is basically just a contingency table showing pairs of ratings. The simple, unweighted kappa for this table is 0.44. That is far short of the 1.0 we'd get if the data matched exactly, and it happens despite every rating being perfectly accurate.

0.44 is obviously not as low as the 0.08 reported in the blog post, but it shows the problem with the test. With perfectly rated data and three categories instead of seven, the test used by this blog post shows a large amount of disagreement. The blog post says:

But it was never possible for the data to have a kappa score or 0.8. We know in advance the test could never show "excellent" agreement simply because of the differing levels of "noise" in the data sets. It doesn't matter how good or bad the SkS group's ratings were: This test would show large amounts of disagreement either way. That means when the blog post says:

It is basing that claim on a test that could easily give a "failure" even if the data used by Cook et al was perfect.

Brandon, Brandon, Brandon, Brandon, Brandon, Brandon, Brandon

Weighted kappa's reach the same conclusion (although weighted kappa is defined differently than you suggest). The test you do is a test of equality of proportions. That's a different test. It also rejects the null that the two sets of results are the same.

Brandon

"We know in advance the test could never show "excellent" agreement simply because of the differing levels of "noise" in the data sets."

With respect to the Cook paper, no, we don't 'know' this. In fact, if we 'knew' this, the study would be invalid, even without consideration of its attempt to validate its results. The starting assumption, on the other hand, is that though there may be some 'noise' (your words) the abstracts are an useful approximation of the papers and thus 'the literature' (Cook's words). I accept this assumption. (to show how it doesn't hold).

A kappa of 0.8 is 'excellent' by convention. The more your kappa tends toward that figure the better things are. The information deficit problem with rating abstracts is part of the starting assumption of the study itself and is pre-analytic to a test carried out to validate it. You cannot pull out ground assumptions and modify them in any validation step. (which is what Cook does, as you do).

Your numerical example is not useful as it is just a numerical restatement of your hypothesis. Why don't you look at the real data to see if behaves the way you say it does? When I try to explain it, you say 'not interested'.

You say: "That means the only difference comes from abstracts rated neutrally where their corresponding paper states a position.". Your 3x3 table doesn't seem to bear this out? What are the labels on the rows and columns?

Richard Tol says:

I'm at a loss as to what this is supposed to mean. I didn't say a word about weighted kappa so I couldn't have suggested any definition for it. In fact, weighted kappa has nothing to do with anything I said.

I also have no idea how the "test you do is a test of equality of proportions." Why is this the test anyone does, and what is it even for? I did a simple calculation of what we'd expect the kappa score to be for Cook et al's data given a few assumptions. Richard Tol's response has no apparent relevance to it.

The example I used demonstrated we would not expect high kappa scores from Cook et al's data set if their ratings were done perfectly. I explained every assumption used and described every step taken for it. If there's a problem in anything I said, it should be easy to point out.

As far as I can tell, Richard Tol hasn't. It appears he has instead used a haughty tone while saying things that have no connection to anything I've posted.

shub:

People like Carrick may claim this is a horrible thing for me to say, but...

Nonsense. Complete and total nonsense. The fact we know a kappa test is inappropriate for a situation in no way invalidates anything. Kappa scores do not indicate inaccuracy.* All they indicate is a lack of agreement. Lack of agreement across different measurements does not invalidate conclusions.

Suppose one has two thermometers. Both thermometers are perfectly accurate, but one's margin of error is +/- .1 degrees while the other's is +/- .2 degrees. A kappa score calculated for the data from these thermometers would show disagreement. Despite the thermometers being completely accurate, the test would show disagreement simply because the level of noise was not the same across both data sets.

Claiming a low kappa score invalidates Cook et al's conclusions is like saying the thermometer with a margin of error of +/- .1 degrees invalidates any temperature series created with the two thermometers because it doesn't agree exactly with the less precise thermometer.

You've never done anything to suggest the "real data" doesn't behave the way I say it does. You've simply highlighted a different aspect of how the data behaves and claimed it refutes my claim. That's nonsense. Data can show many different types of behaviors. I'm "not interested" because you're insisting on discussing a red herring.

The 3x3 table I made is the (contingency) table you'd make when doing a kappa calculation using the numbers I listed. Columns are abstract ratings, rows are paper ratings. 1 indicates endorsement, 2 indicates neutrality, 3 indicates rejection. Creating the table from my listed numbers is simple arithmetic, and anyone who wants to verify the kappa score I got from it can plug it into pre-built calculator, like this one.

*A point I've made many times despite Carrick's claims that I didn't understand it.

I've decided to make a visual demonstration of the point I've been making all along. Look at this graph. Do the two data sets in the graph show the same underlying signal? Definitely. Do they show a very high level of agreement? Not at all.

Both are representations of a simple sin wave. One is quite precise (accurate to integer rounding). The other is less precise. Despite representing the exact same signal, the two data sets will have a low kappa score. That is akin to having two data sets with differing levels of noise. A low kappa score for them doesn't invalidate anything.

The same is true for the Cook et al data. A low kappa score for it doesn't invalidate anything about the paper. A low kappa score is expected because the rating sets measured different things. This is obvious as the authors even highlighted a difference which would lead to a low kappa score.

"Suppose one has two thermometers. Both thermometers are perfectly accurate, but one's margin of error is +/- .1 degrees while the other's is +/- .2 degrees. A kappa score calculated for the data from these thermometers would show disagreement. Despite the thermometers being completely accurate, the test would show disagreement simply because the level of noise was not the same across both data sets."

You are correct.

But we are dealing with categorical variables, not continuous ones.

If you take the same thermometer data and fix cutoffs that demarcate 'high', 'medium' and 'low' temperatures, and if the error in one of the thermometers makes its values swing across the cutoffs, it would be an accurate thermometer with lower precision and the kappa would be low.