Bishop Hill

Continental hindcasts

Apr 7, 2012

Climate: Models

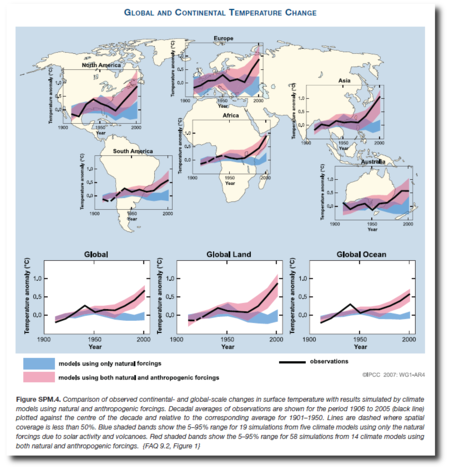

I recently emailed Richard Betts, inquiring about evidence that climate models could correctly recreate the climate of the past ("hindcasts") at a sub-global level. Among other things, Richard pointed me to FAQ 9.2 from the IPCC's Fourth Assessment Report, a continental-scale comparison of model output with all forcings (red band), natural-only forcings (blue band) and observations (black line). This figure also appears in the Summary for Policymakers as SPM 4. There is a similar analysis at the subcontinental level in the same chapter of the report.

For now I'm going to focus on the continental-scale analysis.

On the face of it, this looks like reasonable evidence of some hindcast skill for a group of climate models, at least as far as temperatures are concerned (Richard says the skill is much less for precipitation, for example).

Surprisingly for such an important finding, this seems to have been put together especially for the Fourth Assessment Report rather than being based on findings in the primary literature. (Richard has also pointed me to some papers on the subject, and I'll return to these on another occasion.)

With a bit of a struggle, it is possible to find some details of how FAQ9.2 was put together: see here. The detail is quite interesting and leaves me wanting to know more. For example, here's how the model runs were chosen.

An ensemble of 58 “ALL” forcing simulations (i.e., with historical anthropogenic and natural forcings) was formed from 14 models.[...] An ensemble of 19 “NAT” forcing simulations (i.e., with historical natural forcings only) was formed from 5 models. See Note 1 below for the list of simulations. Models from the multi-model data archive at PCMDI (MMD) were included in these ensembles if they had a control run that drifted only modestly (i.e., less than 0.2 K/century drift in global mean temperature).

This immediately raises the question of why there are so many more models behind the red "ALL" band than the blue "NAT" band. Surely you would want to have the same models in the two bands. Otherwise you'd have an apples-to-oranges comparison, wouldn't you?

Also, I'm struck by the sharp warming shown in each and every continent. I had always believed that the majority of the warming was in the Arctic, but perhaps I am mistaken.

Lastly, I have a vague idea that there is some history behind this figure - did Tom Wigley do a figure like this once or is my memory deceiving me?

Note: This post is about sub-global hindcasts. Comments on radiative physics or other off-topic subjects will be snipped.

Reader Comments (94)

Where is the flat fifteen years again? You know, the ones that actually happened? Let's only use the ones in the ensemble that got that bit right.

And then again, this really says nothing, other than that the set of assumptions we use includes a lot more 'man-made' than 'natural' forcing. Got any measurements to back that up?

Making predictions is easy, especially about the past.

You can hindcast anything with a careful choice of variables and parameters. But the models involved in hindcasting have no predictive capability whatever, as has been pointed out many times.

It's hard to make predictions, especially about the future.

Figure 9.12 in WG1 Chapter 9 shows equivalent natural/natural+CO2 comparison for four regions of the Arctic (Alaska; Canada, Greenland, Iceland; Northern Europe; Northern Asia) and the post-1975 upticks are indeed a bit steeper than further south.

(Does anyone know why all the natural-only model runs find a temperature decline since about 1950?)

Why are the error bands, on land and at sea, directly proportional to the number of temperature measurements?

There are more records in Europe and North America than anywhere else, but here the temperature is more uncertain than in the oceans, where we have bugger all.

Do they really believe that they can determine the temperature better in South America than in North America?

The difference in the size of the error estimates shows we are dealing with illusionists, and not statisticians.

Something Wicked This Way Comes.

Yes, lots of "history" behind this one...

... way back in 2009.

Try:

http://wattsupwiththat.com/2009/11/29/when-results-go-bad/

and

http://wattsupwiththat.com/2009/12/08/the-smoking-gun-at-darwin-zero/

One of our leading Swedish Skeptics was involved too ...

... in interrogating Trenberth :-)

I was surprised that Richard Betts wants to hang out this dirty laundry again.

Docmartyn -

The error bands are significantly wider in Europe and North America because the simulations had a far wider spread for those regions. See figure S9.1 in Appendix 9D.

Antarctica doesn't seem to get a mention.

Isn't being allowed to choose which models and model runs to include just a fudge factor?

In April last year I blogged on a similar theme which seems relevant.

That particular article can be found here if anyone's interested.

The article is concerned with the question of validation and describes a horse-racing system which purported to select winners based on their performance in about 20 "key" races. Needless to say, while the "supporting evidence" showed a healthy profit in each of the previous five years, when put to the test the system failed miserably.

Presumably the creators of this system would now be able to provide the same rubbish 10 times as quickly as they did 40 years ago using a computer model but the end result would be the same.

Which goes to prove that if the will is there the "evidence" can be produced but what we don't know is whether that evidence is in any way meaningful or whether further adjustments will be necessary to the system to enable us to sell it to some unsuspecting mug in time for next season!

It also goes to prove that there is nothing new in making the facts say what you want them to say.

If you develop a model with a number of different climate forcing mechanisms and then remove some of those forcings, it is self-evident that the model results will be different. The changes are clearly an artifact of the modelling procedure regardless of whether the model algorithms are valid. The results could only be used as evidence of anthropogenic changes if you believe that the climate models adequately represent all the global processes affecting climate. And even the IPCC consider that they do not.

Peter Stott of the Met Office published a paper on this in 2010 and claimed to have found "fingerprints" of AGW which I commented on here:

http://ccgi.newbery1.plus.com/blog/?p=272

The error bands are significantly wider in Europe and North America because the simulations had a far wider spread for those regions. See figure S9.1 in Appendix 9D.

Apr 7, 2012 at 2:38 PM | HaroldW

------------------------------------------------------

Layman's possibly stupid question.

If I ask ten people the way to a town and they point in several different directions then why would I think that if I had asked just two people that their averaged direction would be any more accurate than the averaged ten?

i.e. everything else being equal, if you have less data to start with how is that somehow more accurate than if you have more?

Shouldn't more data, IF the simulations are anything like useful, lead to a MORE conclusive result?
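The statistical intuition behind the question can be checked with a small simulation. This is a toy sketch, assuming each answer is the true bearing plus independent random error with a made-up spread of 30 degrees, and treating bearings as ordinary numbers (reasonable only while errors are small); it says nothing about how climate-model errors are actually distributed, and they need not be independent. It shows the average of ten answers scattering less than the average of two, roughly by the square root of 10/2, while the spread of individual answers stays at 30 degrees however many people you ask.

```python
import random
from statistics import mean, stdev

TRUE_BEARING = 90.0  # hypothetical true direction to the town, in degrees
ANSWER_SD = 30.0     # hypothetical spread of individual answers, in degrees
TRIALS = 20_000

def spread_of_averages(n_people, rng):
    """Standard deviation of the averaged bearing across many repeated polls of n_people."""
    averages = [
        mean(rng.gauss(TRUE_BEARING, ANSWER_SD) for _ in range(n_people))
        for _ in range(TRIALS)
    ]
    return stdev(averages)

rng = random.Random(42)
sd_2 = spread_of_averages(2, rng)
sd_10 = spread_of_averages(10, rng)

print(f"average of 2:  sd ~ {sd_2:.1f} deg")   # close to 30/sqrt(2), about 21.2
print(f"average of 10: sd ~ {sd_10:.1f} deg")  # close to 30/sqrt(10), about 9.5
```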

Why is the replication of a temperature anomaly (with huge error bars) considered skilful even if the actual values are several degrees off the mark?

Regional biases in this paper indicate departures of up to 400% from measured values:

Temperature and humidity biases in global climate models and their impact on climate feedbacks

http://193.10.130.23/members/viju/publication/airscomp/2007GL030429.pdf

What about the other variables?

Climate is not only temperature; precipitation can be far more important, yet we never hear a single word about successful hindcasts of precipitation.

For an independent assessment, see Figures 12-13 of "A comparison of local and aggregated climate model outputs with observed data" in http://dx.doi.org/10.1080/02626667.2010.513518 or in http://itia.ntua.gr/978/

An interesting discussion of this paper can be found in http://dx.doi.org/10.1080/02626667.2011.610758 and the reply in http://dx.doi.org/10.1080/02626667.2011.610759

"Docmartyn -

The error bands are significantly wider in Europe and North America because the simulations had a far wider spread for those regions. See figure S9.1 in Appendix 9D.

Commenter HaroldW"

Bollocks. Absolute, complete bollocks.

It just so happens that their simulations are worst where we have the best records to test them against, and work best where the record is scant?

Can you imagine any other field where such arguments would be accepted?

No, it really isn't.

If you design a set of models such that parameter X dominates the response, and then change parameter X, you get a materially different output. In this case, parameter X is the anthropogenic forcing.

That is the only evidence these hindcasts present. We have no way of knowing if the blue bands are anything other than fairy tales.

I can write a computer program with all of the planetary data, orbital mechanics, etc., known to man, and hindcast the Tunguska meteor strike in Russia in 1908. I can then run the program forward and predict that a large meteor will strike Basingstoke on 24th June 2015, just after tea time (causing millions of pounds' worth of improvements...).

An absurd example, obviously, but the same principle applies - for complex systems, with a large and uncertain number of variables, numerical simulations can never be validated by hindcasting.

Looking at the error bars for natural again, these people purport to have the range down to about 0.2 degrees. What supreme arrogance makes them think that is all there is? That they understand natural forcings (to use their objectionable term) so well?

The models are designed on the assumption that observed temperature rises in the latter part of the 20th C are due to increases in anthropogenic CO2 emission. Clearly, omitting this assumed causative factor from tuned models will lead to an underprediction.

This exercise underlines the foolishness of belief in models when the effects of cloud variability, solar output, ocean currents etc. are so imperfectly understood, and when the Climate Community refuses to discuss their implications.

From WG1: I was always under the impression that the models hindcast showed the temperature higher than the actual measured temperature, and that there was an assumed "missing" aerosol forcing that was put into the models by the modellers. Is this no longer true? Anyway have a look at some of the commentary from AR4 WG1 and you can see that the outputs are a long way from just putting the known forcings in and seeing what comes out, there's a lot of tweaking.
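The compensation this comment describes (and which the AR4 passage quoted in a later comment explicitly raises) can be illustrated with a zero-dimensional equilibrium relation, ΔT = S × (F_ghg + F_aer) / F_2x, where S is the equilibrium sensitivity per CO2 doubling and F_2x is about 3.7 W/m². This is a deliberate simplification (real hindcasts are transient, not equilibrium), and every forcing and sensitivity value below is hypothetical rather than taken from AR4 or any particular model. The point is only that two quite different sensitivities can reproduce the same warming if the aerosol forcing is chosen to match.

```python
F_2X = 3.7  # W/m^2, approximate forcing from a doubling of CO2

def equilibrium_warming(sensitivity_k, f_ghg, f_aer):
    """Equilibrium warming (K) for a sensitivity (K per CO2 doubling) and net forcing (W/m^2)."""
    return sensitivity_k * (f_ghg + f_aer) / F_2X

F_GHG = 2.6  # W/m^2, hypothetical greenhouse-gas forcing

# A low-sensitivity "model" with weak aerosol cooling...
low = equilibrium_warming(sensitivity_k=2.0, f_ghg=F_GHG, f_aer=-0.75)

# ...and a high-sensitivity "model" whose aerosol forcing is solved to give the same warming.
S_HIGH = 4.0
f_aer_needed = low * F_2X / S_HIGH - F_GHG
high = equilibrium_warming(sensitivity_k=S_HIGH, f_ghg=F_GHG, f_aer=f_aer_needed)

print(f"low S:  {low:.2f} K with aerosol forcing -0.75 W/m^2")
print(f"high S: {high:.2f} K with aerosol forcing {f_aer_needed:.2f} W/m^2")
```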

Richard, Tamsin and others are clearly convinced their models are accurate, with maybe some errors. While I'm left with the nagging doubt that models programmed by people who believe CO2 increases temperature by more than twice the natural forcings will show that CO2 increases temperature by more than twice the natural forcings.

I know this may be controversial, but modelling of all non-linear chaotic systems is futile: you cannot model the climate. I'd bet my pension that if we offered to double the grant money for anyone who could disprove these models, we'd be knee deep in plausible refutations within a couple of years.

"Fingerprint studies that use climate change signals estimated from an array of climate models indicate that detection of an anthropogenic contribution to the observed warming is a result that is robust to a wide range of model uncertainty, forcing uncertainties and analysis techniques (Hegerl et al., 2001; Gillett et al., 2002c; Tett et al., 2002; Zwiers and Zhang, 2003; IDAG, 2005; Stone and Allen, 2005b; Stone et al., 2007a,b; Stott et al., 2006b,c; Zhang et al., 2006). These studies account for the possibility that the agreement between simulated and observed global mean temperature changes could be fortuitous as a result of, for example, balancing too great (or too small) a model sensitivity with a too large (or too small) negative aerosol forcing (Schwartz, 2004; Hansen et al., 2005) or a too small (or too large) warming due to solar changes. Multi-signal detection and attribution analyses do not rely on such agreement because they seek to explain the observed temperature changes in terms of the responses to individual forcings, using model-derived patterns of response and a noise-reducing metric (Appendix 9.A) but determining their amplitudes from observations. As discussed in Section 9.2.2.1, these approaches make use of differences in the temporal and spatial responses to forcings to separate their effect in observations."

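The core of the multi-signal approach in the quoted passage (take response patterns from models, but estimate their amplitudes from observations) is a regression of the observations onto the model patterns. The sketch below uses plain ordinary least squares on two invented patterns; the studies cited typically use noise-weighted ("optimal") generalised least squares with internal variability estimated from control runs, so this shows the idea only, not their method. A fitted scaling factor near 1 would mean the observations are consistent with the model's amplitude for that signal.

```python
def fit_scaling_factors(obs, pattern_a, pattern_b):
    """OLS amplitudes (beta_a, beta_b) for obs ~ beta_a * pattern_a + beta_b * pattern_b."""
    saa = sum(a * a for a in pattern_a)
    sbb = sum(b * b for b in pattern_b)
    sab = sum(a * b for a, b in zip(pattern_a, pattern_b))
    say = sum(a * y for a, y in zip(pattern_a, obs))
    sby = sum(b * y for b, y in zip(pattern_b, obs))
    det = saa * sbb - sab * sab  # zero if one pattern is a multiple of the other
    if abs(det) < 1e-12:
        raise ValueError("patterns are collinear; amplitudes cannot be separated")
    beta_a = (sbb * say - sab * sby) / det
    beta_b = (saa * sby - sab * say) / det
    return beta_a, beta_b

# Hypothetical decadal-mean patterns (K): a steadily rising "anthropogenic" signal
# and a "natural" signal that rises then falls. Values are invented for illustration.
anthro = [0.0, 0.05, 0.10, 0.18, 0.28, 0.40]
natural = [0.0, 0.10, 0.15, 0.10, 0.00, -0.05]

# Synthetic "observations": 0.8 x anthro + 1.2 x natural, with no noise added.
obs = [0.8 * a + 1.2 * n for a, n in zip(anthro, natural)]

beta_anthro, beta_natural = fit_scaling_factors(obs, anthro, natural)
print(f"anthropogenic scaling ~ {beta_anthro:.2f}, natural scaling ~ {beta_natural:.2f}")
```

The separation only works because the two patterns differ in shape over time, which is the "differences in the temporal and spatial responses" the passage relies on.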
I guess if you can vary the forcings you'd be a tosspot of the first order if you couldn't hindcast accurately. Wouldn't you?

Same comment as on previous blog

The IPCC admits that our knowledge of many aspects of the climate system is poor or in practice non-existent. Therefore, the model output is irrelevant.

Running ensembles of lots of wrong models does not make the output right.

The climate establishment is obsessed with hosing billions of $$ into models rather than doing something useful like better field research or promoting transparency of data.

I direct Mr Betts to the ravings of Hansen over at WUWT to see what models have achieved.

Regards

Paul

Geronimo: "I guess if you can vary the forcings you'd be a tosspot of the first order if you couldn't hindcast accurately. Wouldn't you?"

Exactly!

Another suggestion

Perhaps the Met Office should allocate some staff to doing what Stephen Goddard does at Real Science. That is study what actually happened according to the numerous historic records dating back to the Egyptians rather than guessing what happens with models, paleofiddling and Hansenesque adjustments to actual thermometer records.

Cheers

Paul

My understanding of the modelling process, from many years of fluid dynamic model experience, is that hindcasting was used as part of the validation process. In other words, the basic physical understanding was used to construct the model, which inevitably had a number of default values for variables where we didn't know the real value but usually had a general idea of the range within which it would be found. The model was then used to hindcast, and the various variables were adjusted until the hindcast model agreed with the previously observed data. THE MODEL WOULD THEN BE RUN IN PREDICTIVE MODE and compared again to the observed data: if there was agreement, everyone cheered; if not (the usual situation), it was back to the drawing board to study the underlying physics.

Thus the ability of models to hindcast satisfactorily is of little value without a subsequent demonstration of their ability to forecast. All models that have undefined variables can be made to hindcast satisfactorily, but this is relatively meaningless. A stopped clock is correct twice every 24 hours, but this does not mean it has any 'skill' in telling the time.
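The calibrate-then-verify procedure described in this comment can be sketched in a few lines: tune a free parameter so the model matches an earlier "hindcast" period, then score it, untouched, on a later period it never saw. The one-parameter model, the grid search and the series (a made-up warming that goes flat) are all hypothetical stand-ins for whatever tuning a real modelling group does. The point is the structure: the hindcast error is small by construction, and only the out-of-sample error says anything about skill.

```python
from math import sqrt

def rmse(predicted, observed):
    """Root-mean-square error between two equal-length sequences."""
    return sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(observed))

def model(rate, times):
    """Hypothetical one-parameter model: a straight-line trend through zero."""
    return [rate * t for t in times]

# Invented series: warms at 0.02 per step for 30 steps, then stays flat for 15.
times = list(range(45))
observed = [0.02 * t if t < 30 else 0.02 * 29 for t in times]

hindcast_t, forecast_t = times[:30], times[30:]
hindcast_obs, forecast_obs = observed[:30], observed[30:]

# "Tuning": pick the rate on a grid that best reproduces the hindcast period only.
candidate_rates = [i / 1000 for i in range(51)]
best_rate = min(candidate_rates, key=lambda r: rmse(model(r, hindcast_t), hindcast_obs))

print(f"tuned rate: {best_rate}")
print(f"hindcast RMSE: {rmse(model(best_rate, hindcast_t), hindcast_obs):.3f}")
print(f"forecast RMSE: {rmse(model(best_rate, forecast_t), forecast_obs):.3f}")
```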

Vincent Courtillot started off one of his Heartland Lectures with these regional graphs. Starts at 2 minutes in.

http://www.youtube.com/watch?feature=player_embedded&v=IG_7zK8ODGA#!

[Calm down please]

Is this the same definition of "accuracy" as the railways' definition of "punctuality"? Anywhere within about 15 minutes will do. In my world if it's got errors it ain't accurate.

http://davidappell.com/AR5/FODS/WG1AR5_FOD_Ch05_Figs_Final_highres.pdf

fig. 5.9

Something Wicked This Way Comes! #2

Yes, there is a "history" ...

... way back in 2009.

Try:

http://wattsupwiththat.com/2009/11/29/when-results-go-bad/

(This is with our own Swedish Skeptic interrogating Kevin Trenberth)

and

http://wattsupwiththat.com/2009/12/08/the-smoking-gun-at-darwin-zero/

I am surprised that Richard Betts wants this dirty washing aired again.

But then ... maybe he doesn't know about it ... hmm ... possible.

PS Captchas are getting difficult to read.

Maybe a computer program might help... oh, wait ;-)

Arthur Dent (Apr 7, 2012 at 5:31 PM)

'if there was agreement, everyone cheered; if not (the usual situation), it was back to the drawing board to study the underlying physics.'

For the GCMs, is it back to the underlying physics, or back to an array of adjustable parameters for a bit of tweaking?

When hindcasting, are the model runs monitored as they proceed, and if so, are runs which are clearly deviating well away from the target values discarded? (instead of a cheer, perhaps a little groan as the operators get back to the tweaking?). Are the published runs merely those which survived this hypothesised trial and error process? In other words, do we only get to see the runs which produced the most cheers?

Ah, the blue and the red bands...

Am I being dense here or what?

The exercise is supposed to be a comparison of observations versus computed values.

From what I have seen (and I have seen quite a bit) even short term (<24 hour) atmospheric models regularly diverge horribly from reality when using sparse data. Subjectively this is particularly noticeable in something I follow on a professional basis - the northern jet stream. The prediction of the jet stream as I understand it involves multiple model runs to weed out the plain insane and then the forecaster cherry picks what looks probable/comfortable as a scenario. Several forecasters are honest enough to volunteer the number of runs - but never the spread of starting conditions or final values of discarded outcomes.

The profusion of runs for the red band can mean only one thing.

We still have much conjecture about what actually drives the climate, sparse data points and what appears to be a complex and chaotic (in the mathematical sense) system and with multiple (political) assumptions plugged into the models - this strikes me as a plainly contrived and convoluted exercise in confirmation bias.

The usual litany of comments, some verging on the anti-scientific -

"Where is the flat fifteen years again?" "no predictive capability whatever" "dealing with illusionists, and not statisticians" "just a fudge factor" "Bollocks. Absolute, complete bollocks" "anything other than fairy tales" "supreme arrogance" "foolishness of belief in models" "you cannot model the climate" "This is absolute and indefensible dishonesty" "fraud".

Commenters in the various threads about models on this site seem to think that the models live in a world of their own and that, for instance, they are the only way that climate sensitivity is derived. Climate sensitivity is also determined by analysis of the instrumental record, paleoclimate data, climate response to volcanoes, response to the solar cycle etc. These all give roughly the same range and most likely sensitivity. To say that everything is based on just models is wrong.

The current slowdown in global temperature rise is just a result of short term natural variations (with maybe some help from aerosols). We are still within the error range of the models. Heat is currently building up in the oceans. This heat isn't going to magically disappear. CO2 in the atmosphere is increasing and things are going to get hotter. You can't get around it.

Also on the subject of model accuracy. I see no mention of sea level rise being right at the top end of IPCC predictions. Or September Arctic sea ice extent actually being below the IPCC's worst case scenario.

Geronimo: "I guess if you can vary the forcings you'd be a tosspot of the first order if you couldn't hindcast accurately. Wouldn't you?"

Exactly!

Apr 7, 2012 at 5:19 PM | Roger Longstaff

Yes exactly.

I tried to have a quick look at roughly what the aerosol forcings were. The 2 related website links at the bottom of this doc went to ipcc external sites, one of which I didn't have permissions to see and the other was a ~personal page which I probably wouldn't expect to stick around quite a few years later. Surely the reference links should be hosted on the same site. As this doesn't really refer to any other literature directly you haven't a clue where the graphs come from or why these 'ensembles' were chosen.

Rob Burton: "I tried to have a quick look at roughly what the aerosol forcings were..."

According to table S9.1, forcings were not the same for the different models. And 25 out of the 50 "all" runs did not have a forcing corresponding to indirect aerosol effects. [I don't know why the table only indicates 50 model runs when it's quite clear elsewhere that there were 58.] Some didn't account for black carbon, others for ozone, and others for land use.

I don't know whether the net forcings are significantly different from each other or fairly similar. Perhaps someone has made such a comparison?

Would it be possible to just use one model and just change the assumed CO2 'forcing' term?

Isn't that what Hansen did here: http://thedgw.org/definitionsOut/..%5Cdocs%5CHansen_climate_impact_of_increasing_co2.pdf?

This is an example of a prediction in climatology which can be compared with subsequent observation.

"Richard, Tamsin and others are clearly convinced their models are accurate, with maybe some errors."

Mike, it's more like believing that our kids are getting more intelligent because the number of A****s goes up every year.

While Richard, who seems a cheerful enough cove, is telling us that the models are reasonably accurate, and that the Met Office forecast the current hiatus in warming, presumably from the models, he must have more than a little faith in their veracity. If they did forecast it, the only place they announced it must have been in the Met Office canteen, and then only with Richard present. I've wondered what Post Normal Science was about, but have found one possible characteristic of it in the climate science community. When something they haven't forecast occurs (a seemingly daily occurrence) they say, quick as a flash, "We forecast that." You see, "post-normal" means "hindsight forecasting". I realise they've been doing it with the weather for years, but nobody put the cost of petrol, air travel and energy to justify it for the weather, so we haven't noticed.

The problem is that they don't seem to have any version control, so they can update, say, the temperature records, and make 2010 the hottest year two years after the prediction that it would be, and then discard the data that for the past 14 years had shown 1998 as the hottest year. Now that's the simple end of things; what about the models? I don't know whether they use software engineering disciplines to develop and maintain the software on the models, or for that matter who wrote them and where the documentation is, but what we can see is Trenberth telling us that the models aren't even aligned with the current observed state of the climate and Richard saying they can forecast weather 10 years out with 60% accuracy. So can you and I, by the way, without models: I am forecasting a hot summer and a colder than average winter for 2022.

I know the Bish warned him off, but I do miss my daily dose of mdgnn's back radiation rant.

We have been through all this several times. Models cannot be used for prediction. To argue that they can simply proves that you are suffering from serious conceptual confusion. Hindcasting is predicting the past and models cannot do hindcasting. However, models can be tuned to reproduce the past. After all, that is what models do, reproduce important aspects of reality. How anyone believes that the use of models to reproduce the past is something more than sophisticated massaging of existing graphs is beyond comprehension.

I am just an engineer with 50 years of work behind me but there is something not quite right about those graphs.

First, there is no mention of the year they started their regression from.

Second, what do the graphs actually show? Is it the actual output of the model running in reverse or some 'adjusted' output?

Third, if it is some adjusted output what were the adjustments and why? After all, if their models are as good as they say they are there shouldn't be any need of adjustments when running in reverse.

Fourth, if the output of the models does not match the actual real world physical measurements when running in reverse, it is time to scrap the models, admit they don't work and try to build something based on real physical measurements. That way, when they next produce results - maybe ten years hence - their results might better represent the real world.

From what I have seen of the output from climate scientists there is no way I would let them anywhere near a real world engineering project.

I think it rather telling that here we have continental (and subcontinental) scenarios modeled without any indication at all in the "executive summary" of the sampling density or the actual geographic bounds of the data sets used - surely a fairly straightforward thing to achieve with all the public money being hosed into this endeavor?

As far as I can see - this massive broad brush approach flies directly in the face of the huge regional weather systems which predominate the climate in various parts of the globe. Trying to average these out is an understandable impulse - but the averaging goggles supplied by AR4 look more like reality substitution spectacles.

Climate advocacy is what it is - or maybe it's a marketing exercise?

Anivegmin:

Welcome to the site:

You appear to be a smidgeon over-excited, but nonetheless you make some good points, or at least points that can be addressed and discussed.

It was me who said you can't model the climate, and you call that "anti-scientific". Whereas I would say it's anti-scientific to tell people you can model a coupled non-linear chaotic system. If you go on to then say you can tell the future using the models of coupled non-linear chaotic systems, you've moved from being non-scientific into practising astrology, or should that be modelology.

On the issue of climate sensitivity, I would be interested if you could cite the papers that derived the 3.3C sensitivity using volcanoes, paleoclimatology etc. It seems an awfully difficult thing to do historically given the huge gaps we have in our knowledge of the parameters and variables of past climate. There is only one way of deriving climate sensitivity with any accuracy, in my view at least, and that is to measure it, and the measuring systems have only been available since 1979, and then not for the whole period. In fact Lindzen and Choi did just that and found that the increased heat was causing increasing OLR, but instead of being delighted at finding out where the "lost" heat was going, Dr. Trenberth and his associates in the Team were outraged. I don't know whether this outrage was because they didn't want the heat to escape, or because they have a paper in the pipeline explaining how the heat had managed to avoid the Argo buoys and hidden itself in the deep oceans and didn't want this fascinating theory to be, if you'll excuse the pun, dead in the water.

"The current slowdown in global temperature rise is just a result of short term natural variations (with maybe some help from aerosols)."

Can you cite the papers that have found the short term natural variations? I know that there was a theory that it was the Chinese burning coal that caused the cooling, but that, excuse the pun again, seems to have gone on the back-burner as the sheer ludicrousness of the suggestion became obvious. Aerosols appear to be the anti-matter to CO2 in the world of climatology. If we get warming it's because of CO2 and if we get cooling it's because of aerosols. In fact they're so useful they've been injected into the hindcast model outputs to reduce the past warming showing up there.

"Also on the subject of model accuracy. I see no mention of sea level rise being right at the top end of IPCC predictions. Or September Arctic sea ice extent actually being below the IPCC's worst case scenario."

Where do you get your information on sea surface temperatures? Tell us what the sea level rise is and how soon the current rate will get us into eco-disaster territory.

Gotta go now, sorry, but look forward to your replies, will look in tomorrow.

If you take 25 bingo numbers at one second intervals from the bag, I can derive a mathematical formula that will correctly (within reasonable error limits) produce a plot of value versus time. However, it will not predict the next number, well there is a 1 in 65 chance. This is not a million miles away from the development of the GCMs.
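The bingo analogy is easy to reproduce: a polynomial of degree n - 1 can pass exactly through any n points, so 25 draws can be "explained" perfectly by a degree-24 polynomial, yet its value at the 26th second has nothing to do with the ball actually drawn. The sketch assumes 90-ball bingo (hence 65 balls left after 25 draws, matching the commenter's 1 in 65) and uses exact Lagrange interpolation as a stand-in for whatever formula the commenter had in mind.

```python
import random
from fractions import Fraction

def lagrange_eval(xs, ys, x):
    """Evaluate the unique polynomial of degree len(xs)-1 through (xs, ys) at x, exactly."""
    total = Fraction(0)
    for j, (xj, yj) in enumerate(zip(xs, ys)):
        term = Fraction(yj)
        for m, xm in enumerate(xs):
            if m != j:
                term *= Fraction(x - xm, xj - xm)
        total += term
    return total

rng = random.Random(2012)
balls = rng.sample(range(1, 91), 26)  # 26 distinct balls from a 90-ball bag
draws, next_ball = balls[:25], balls[25]
times = list(range(1, 26))            # one draw per second

# The fitted polynomial reproduces all 25 draws exactly...
assert all(lagrange_eval(times, draws, t) == d for t, d in zip(times, draws))

# ...but its "prediction" for second 26 is just an extrapolation of the fit.
prediction = lagrange_eval(times, draws, 26)
print(f"polynomial predicts {prediction}, actual ball was {next_ball}")
print(f"chance of guessing the next ball: 1 in {90 - len(draws)}")
```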

Quite wrong, anivegmin. My views that the model output on 30-year scales are "fairy tales" are based on objective scientific analysis of model output. The models have no predictive skill on a continental scale outside of the annual seasonal cycle, as clearly explained in this paper:

Anagnostopoulos, G. G., D. Koutsoyiannis, A. Christofides, A. Efstratiadis, and N. Mamassis, A comparison of local and aggregated climate model outputs with observed data, Hydrological Sciences Journal, 55 (7), 1094–1110, 2010.

DocMartyn has it - these are not 'error bars' or standard errors that everyone is used to.

They are the population of run results trimmed down by 10% to get rid of stragglers. This 90% represents roughly 4 standard deviations of the population (Assuming that the ensemble results are part of a population and not simply random meaningless drivel).

The population of these model runs has a mean (which is not shown in the charts), and if we repeated the ensemble we would get another population with a slightly different average. If we kept doing this we would end up with a bunch of estimated means. The standard deviation of this bunch of estimates is known as the standard error.

The more runs you have in each ensemble the more reliable your estimate of the ‘true’ mean should be. This reliability increases with the square root of the number of samples you have, i.e. your estimate doesn’t get 100x better with 100x more runs but just 10x better. The reliability of your mean is also likely to be better if your results are very similar to each other i.e. they have a low standard deviation.

So the standard error of a mean can be guessed at by: The standard deviation of the underlying observations divided by the square root of the number of observations. (The square root of 58 is approx. 8)

So the height of one standard error bar would be only 1/4 * 1/8 of the height of the population shown in the graphic.

If we ran more batches of 58 runs, we would expect 95% of the batch means to fall within about 2 standard errors either side of their overall average.

So if you wanted a band that hopefully covered 95% of the ensemble means, i.e. some kind of confidence interval for the ensemble mean, you would make it 4 standard errors high, i.e. about an eighth of the height shown.
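The back-of-envelope arithmetic above can be checked with Python's standard `statistics.NormalDist`, under the same assumption that the ensemble members behave like draws from a normal population. One refinement: the central 90% (5-95%) of a normal distribution spans about 3.3 standard deviations rather than 4, which puts the 95% band for the ensemble mean nearer a sixth of the plotted band than an eighth. The conclusion that the band for the mean is far narrower than the plotted spread is unchanged.

```python
from math import sqrt
from statistics import NormalDist

N_RUNS = 58       # size of the "ALL" ensemble
z = NormalDist()  # standard normal distribution

# Width of a 5-95% band of individual runs, in units of the run-to-run standard deviation.
spread_band = z.inv_cdf(0.95) - z.inv_cdf(0.05)  # about 3.29

# Standard error of the ensemble mean, in the same units.
standard_error = 1 / sqrt(N_RUNS)  # about 0.131

# Width of a 95% confidence band for the ensemble mean (about +/- 1.96 standard errors).
mean_band = (z.inv_cdf(0.975) - z.inv_cdf(0.025)) * standard_error  # about 0.51

print(f"5-95% spread of runs:     {spread_band:.2f} sd")
print(f"95% band for the mean:    {mean_band:.2f} sd")
print(f"ratio (mean band/spread): {mean_band / spread_band:.3f}")  # about 0.156, roughly 1/6.4
```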

I hope that my crude stats do not offend.

Apr 7, 2012 at 8:30 PM | geronimo

In a previous thread, Richard Betts wrote:

An organisation, whose core business is prediction, that thinks this use of the word "predict" is acceptable, is a disgrace.

In that thread, I said

Unless there were Met Office memos dated 1998 that said "Note to executive committee: Our model predicts no significant warming for the next 15 years." then they DID NOT predict warming in any meaningful sense of "predict".

Me too. I can't make much sense of what he bangs on about but it is never obviously nonsense and he does write with an air of knowing what he is talking about - in contrast to, for example, the gobbledegook in "Slaying the Sky Dragon" which is instantly recognisable as such.

Oops. In my reply to anivegmin (currently in moderation), I link a document already linked by Dr Koutsoyiannis above. I missed that when I first posted!

@Martin A.

I, too, get the same feeling about mdgnn. He reminds me of okm. They both appear to be knowledgeable and very intelligent.

Mostly they get tolerated and ignored but I've yet to see a sufficiency of engagement with their views on blogs.

What if they are off-base and correct while our perpetual musings about models and graphs are just numerical squabbles about angelic population-densities?

I have always wanted to “hindcast” previous model predictions. I have an increasing suspicion that if we checked “our” progressive performance in forecasting the temperature of this planet, we would find that our ability is diminishing.

No need to check parameters, error bars, forcings, population-densities or 95% probabilities etc. Just simply are the latest decadal predictions more accurate than their predecessors and if not why not? Especially as we have had decades of constantly increasing resource and experience.

I suspect that they are not and by a significant variance. I have asked RB for a chronological review of the MO’s “Decadal Forecasts” since their inception.

http://www.bishop-hill.net/blog/2012/3/14/climate-hawkins.html#comments

It will be interesting to see how the long-range forecasts have actually panned out over the years.

Martin A writes:

"An organisation, whose core business is prediction, that thinks this use of the word "predict" is acceptable, is a disgrace."

Absolutely! On that meaning of 'predict', anything that falls within the error bars is a prediction that is confirmed. To use the word 'predict' meaningfully, you must specify some event that you are predicting and specify the conditions in which it will occur. You cannot simply say "Give or take ten."

Vinny Burgoo: 14:19.

The reason why the 'natural-only runs' predict cooling is because the aerosol optical physics in the climate models is wrong.

The failure of modelers to engage in a debate about reasonable standards for prediction says all that you need to know.